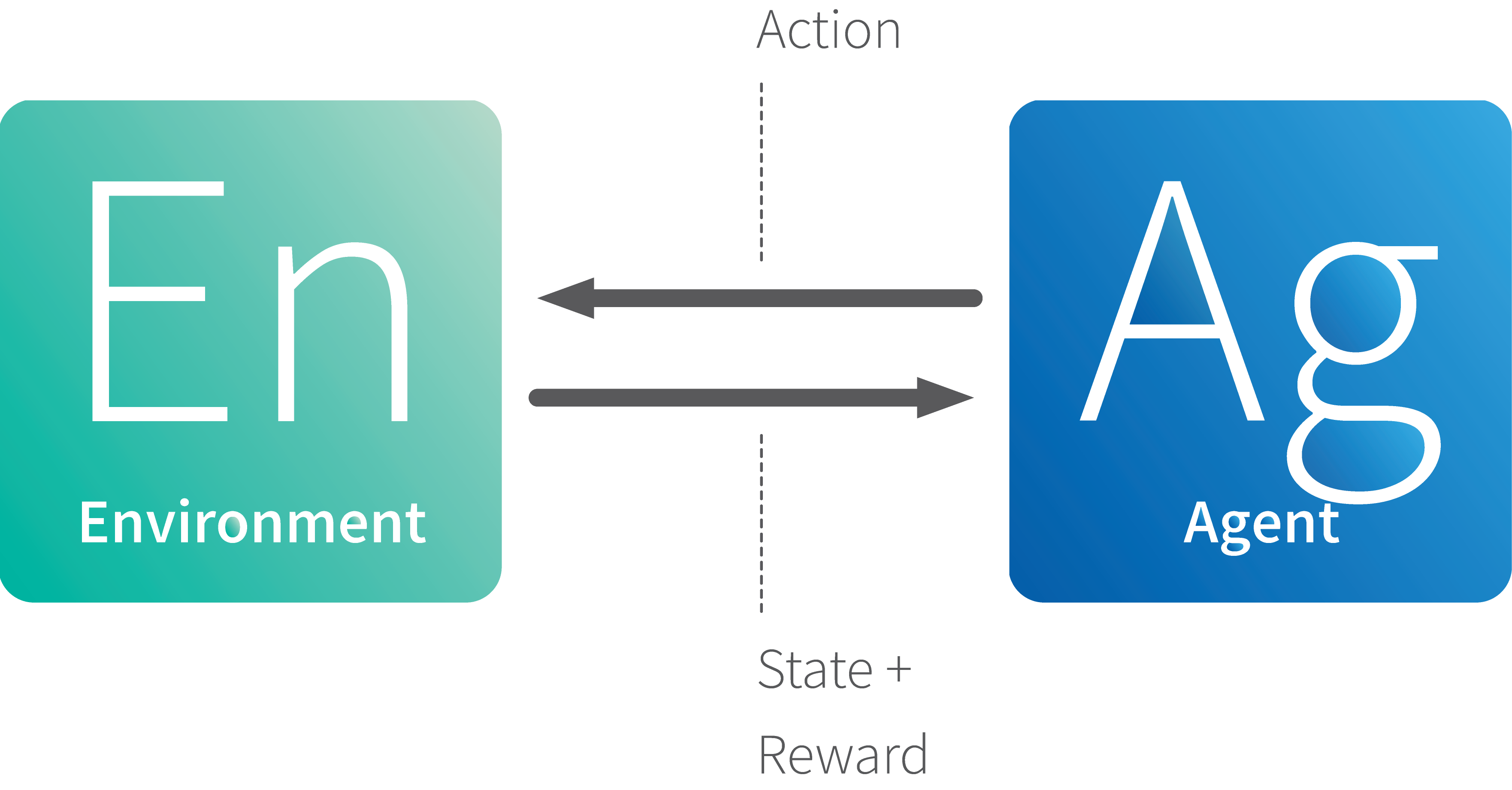

Reinforcement learning (RL) is a popular type of machine learning in which an agent learns by interacting with an environment. Rather than being told whether its actions are correct or incorrect, the agent receives only a (possibly time-delayed and stochastic) reward signal that it seeks to maximize. The RL framework has allowed agents to learn to play video games, control robots, and optimize data centers.

Although RL has had many successes, it often needs significant amounts of data before it reaches high-quality performance. In the paper, we consider two distinct settings. The first setting assumes the presence of a human who can provide demonstrations (e.g., by playing a video game). These demonstrations can be used to change the agent’s action-selection method. For example, in addition to the usual “explore vs. exploit” tradeoff, we add a third “execute” choice. The agent can now choose between taking a random action (explore), taking the action it thinks is best based on its Q-values (exploit), and taking the action it thinks the demonstrator would have taken (execute). Initially, the probability of executing is high, so the agent mimics the human; over time, this probability is decreased so that the agent’s learned knowledge can allow it to outperform the human demonstrator.

The demonstrations can also be used with inverse reinforcement learning, so that the agent learns a potential-based shaping function. Such a shaping reward is guaranteed not to change the optimal policy, but it can allow the agent to learn much faster. In both cases, just a few minutes of human demonstration can have a dramatic effect on total performance, and the agent can learn to outperform the human.
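A minimal Python sketch of the two ideas above, assuming a tabular agent. The function names, the `q_values` dictionary, the `demo_policy` function (standing in for whatever model of the demonstrator the agent learns), the multiplicative decay schedule, and the potential function `phi` (standing in for the output of inverse reinforcement learning) are all hypothetical; the post does not specify them. The shaping term uses the standard potential-based form `F(s, s') = gamma * phi(s') - phi(s)`, which is the form that leaves the optimal policy unchanged.

```python
import random


def select_action(state, actions, q_values, demo_policy, p_execute, epsilon, rng=random):
    """Pick an action via execute (mimic demonstrator), explore, or exploit.

    q_values: dict mapping (state, action) -> estimated value (unseen pairs default to 0).
    demo_policy: hypothetical function state -> action approximating the human demonstrator.
    """
    assert 0.0 <= p_execute and 0.0 <= epsilon and p_execute + epsilon <= 1.0
    r = rng.random()
    if r < p_execute:
        return demo_policy(state)  # execute: do what the demonstrator would do
    if r < p_execute + epsilon:
        return rng.choice(actions)  # explore: uniformly random action
    # exploit: greedy with respect to the current Q-value estimates
    return max(actions, key=lambda a: q_values.get((state, a), 0.0))


def decay_execute(p_execute, rate=0.99, floor=0.0):
    """Shrink the execute probability (hypothetical multiplicative schedule)."""
    return max(floor, p_execute * rate)


def shaping_reward(phi, s, s_next, gamma):
    """Potential-based shaping term F(s, s') = gamma * phi(s') - phi(s)."""
    return gamma * phi(s_next) - phi(s)
```

In a Q-learning update, the agent would add `shaping_reward(phi, s, s_next, gamma)` to the environment reward for each transition. Because the discounted shaping terms telescope along any trajectory, they can speed up learning without changing which policy is optimal. Starting `p_execute` near 1 and calling `decay_execute` after each episode means early actions mostly copy the demonstrator, while later actions come from the agent's own Q-values.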

For the second setting, consider the case where no environmental reward signal is defined, but a human trainer can provide positive and negative feedback. If a person can train a dog, why not build algorithms that let people train an agent? Our work presents algorithms that allow non-technical human trainers to teach both simple concepts (e.g., a policy for a contextual multi-armed bandit) and more complex concepts like “bring the white chair to the purple room.” In particular, we treat human feedback as categorical rather than numeric. Our Bayesian reasoning algorithm then infers the policy the human most likely wants, given the history of feedback it has received.
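To make “categorical rather than numeric” concrete, here is a minimal Python sketch of maximum-likelihood policy inference for a contextual bandit. The feedback model is an assumption for illustration and not necessarily the one used in the paper: the trainer stays silent with probability `mu_plus` after a correct action and `mu_minus` after an incorrect one, and otherwise gives the appropriate feedback (`"pos"` or `"neg"`) but errs with probability `eps`. All function and parameter names are hypothetical. With a uniform prior over target actions, the maximum-likelihood choice is also the maximum a posteriori one.

```python
import math


def feedback_prob(feedback, correct, eps, mu_plus, mu_minus):
    """P(feedback | whether the taken action matches the trainer's target action).

    Hypothetical model: silence rates differ for correct vs. incorrect actions,
    and explicit feedback is wrong with probability eps.
    """
    if correct:
        probs = {"pos": (1 - eps) * (1 - mu_plus), "neg": eps * (1 - mu_plus), "none": mu_plus}
    else:
        probs = {"pos": eps * (1 - mu_minus), "neg": (1 - eps) * (1 - mu_minus), "none": mu_minus}
    return probs[feedback]


def infer_policy(history, contexts, actions, eps=0.1, mu_plus=0.3, mu_minus=0.1):
    """For each context, return the target action that maximizes the feedback log-likelihood.

    history: list of (context, action_taken, feedback), feedback in {"pos", "neg", "none"}.
    Assumes 0 < eps < 1 and 0 < mu_plus, mu_minus < 1 so no probability is zero.
    """
    policy = {}
    for c in contexts:
        events = [(a, f) for ctx, a, f in history if ctx == c]
        best_action, best_ll = None, -math.inf
        for target in actions:
            ll = sum(
                math.log(feedback_prob(f, a == target, eps, mu_plus, mu_minus))
                for a, f in events
            )
            if ll > best_ll:  # ties keep the earlier action in `actions`
                best_action, best_ll = target, ll
        policy[c] = best_action
    return policy


history = [("red", "left", "neg"), ("red", "right", "pos"), ("blue", "left", "none")]
print(infer_policy(history, ["red", "blue"], ["left", "right"]))
# {'red': 'right', 'blue': 'left'}
```

Because `mu_plus` exceeds `mu_minus` in this sketch, silence after an action counts as weak evidence that the action was correct (that is why `"blue"` maps to `"left"`). Treating feedback as a fixed numeric reward, with silence as zero, cannot express that distinction.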

While these results are encouraging, we believe there are many additional ways agents can leverage the knowledge and biases of non-technical users. My International Joint Conferences on Artificial Intelligence (IJCAI) Early Career Talk will take place in Stockholm on July 17th and will discuss the work summarized in this post. The paper this blog post is based on, which is also the basis of my talk, can be found at Publications – RBC Borealis.