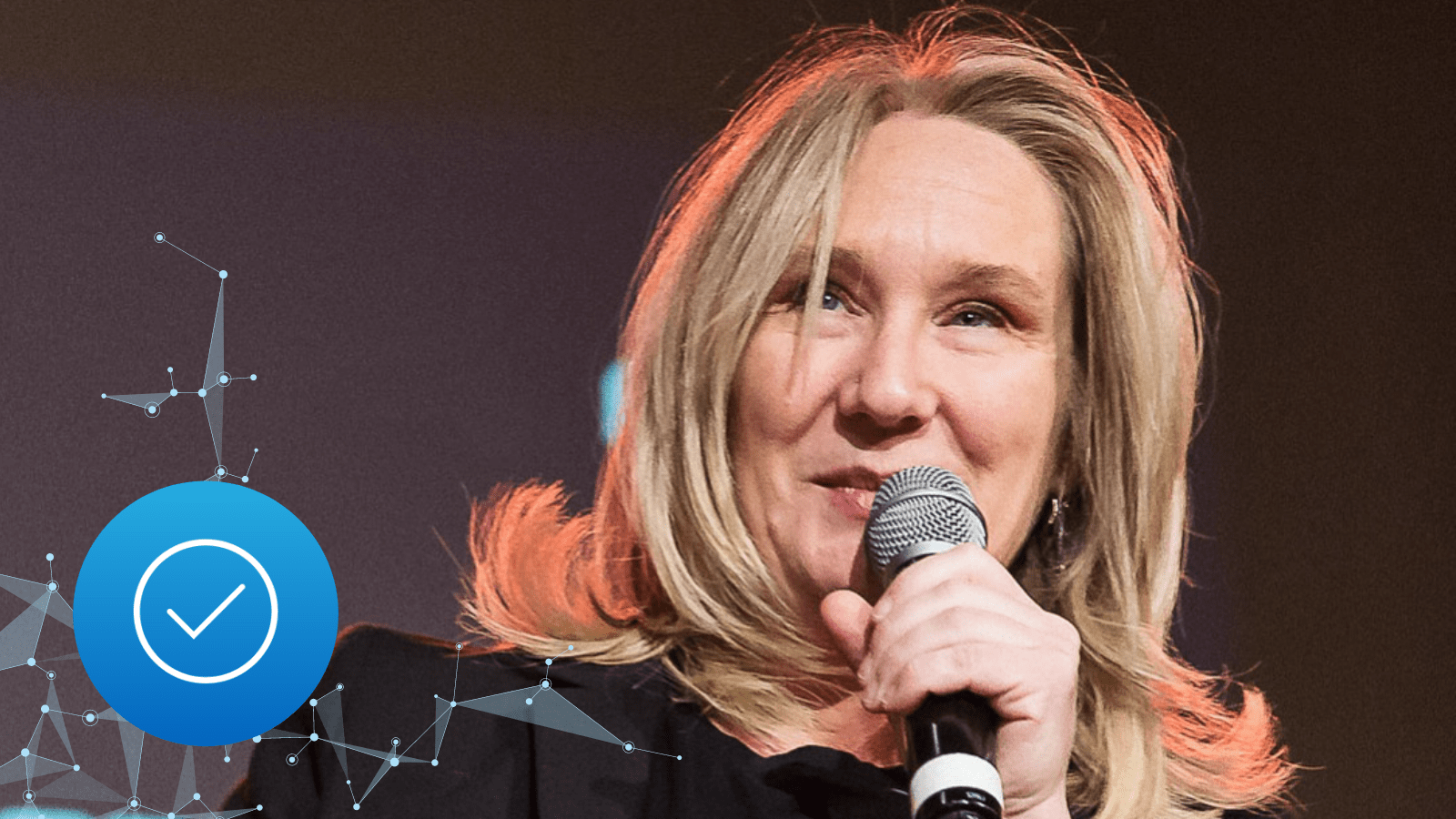

In this post, we explore the concept of social equality in AI with Valentine Goddard, Founder and Executive Director of AI Impact Alliance.

The views expressed in this article are those of the interviewee and do not necessarily reflect the position of RBC or RBC Borealis.

What are people most concerned about when it comes to the social impact of AI?

Valentine Goddard (VG):

The debate about the social impacts of AI has evolved alongside the technology itself.

More recently, the pandemic has been rapidly widening the digital gap, which is often linked to socioeconomic inequities. The conversation has shifted from an overemphasis on the economic efficiencies of AI to one about social resilience in the age of AI. We're facing a historic opportunity to decide what kind of new normal we want.

What can we do to improve awareness of the social impacts of AI?

VG:

To start, I think diversity is key. We need to improve the diversity of perspectives that go into the development of AI and data analytics. I think awareness about the importance of this is slowly increasing.

Meanwhile, we're also seeing social issues rise up the corporate agenda. An increasing number of organizations have created roles responsible for AI ethics, but there is still much debate about what those roles mean exactly.

I'm also encouraged by efforts to ensure that developers and employees are able to raise concerns about the fairness of the algorithms they are creating. That said, we still encounter businesses that could do more to properly support their applied research teams in this area.

How can businesses do better?

VG:

I have noticed an increasing desire on the part of businesses to participate in 'AI for Good' or 'Data for Good' initiatives. These are a great first step towards improving awareness of the social impacts of AI, but they are often just one-offs. What we need is a more sustainable approach.

That is why I have been advocating for greater partnership between the AI ecosystem and civil society organizations (CSOs) as a way to drive participation and fairness in the development and implementation of AI. It will likely require more funding for CSOs as they move up the AI maturity curve, but this is not just about funding.

For those at the leading edge of AI development and governance, including CSOs can deliver significant benefits. CSOs can help bring diversity of perspective (seven in ten employees at socially focused non-profits are women); they can help socialize the wider benefits of AI within their communities (often those most underserved by technology); and they can help identify emerging social issues related to the use of AI, and perhaps even help solve them before they become problems.

There is also a growing body of research on the value of including CSOs in business decision-making. Some academics suggest it can increase trust and accelerate the responsible adoption of new technologies. Others stress the value of working with CSOs to collect relevant, high-quality data that can lead to more robust and socially beneficial results. Still others highlight the need to bring more democratic processes into regulatory innovation.

What types of CSOs should be involved?

VG:

That largely depends on the sector you are working in and the stakeholders you touch, but the net should be cast wide. Before the pandemic disrupted our normal lives, my organization, AI Impact Alliance, conducted workshops throughout the year that led to the adoption by the United Nations of international public policy recommendations on the role of civil society and the arts in digital governance. It's essential to keep working towards more inclusive AI policy recommendations.

How can executives and developers tell if they are making a positive social impact?

VG:

Two approaches are gaining adoption in the market. The first is to conduct ongoing social impact assessments of the AI models and technologies you are developing and implementing. There is no single guide to what goes into a social impact assessment, so companies will need to work with stakeholders and others to define which standards and KPIs they will measure.

The second is social return on investment criteria, which we are seeing adopted more widely in both decision-making and corporate reporting. Again, the standards vary depending on the sector and market, but they can be a useful tool for measuring progress.

What role should public institutions play?

VG:

I would argue that public institutions need to take a lead role in supporting education and digital literacy – particularly around the ethical, social, legal, economic and political implications of AI. They need to be encouraging the adoption of democratic AI processes and normative frameworks. They need to be tackling the roots of the digital divide. And they need to be supporting new forms of democratic participation and civic engagement in the field of AI.

Governments can also play a role in incentivising responsible behaviour by businesses. They could take a heavy-handed approach, for example by making social impact assessments compulsory, or they could take a more collaborative approach by ensuring the right stakeholders are at the table and that social return on investment is recognized and valued.

What can the AI community do?

VG:

At an individual level, we've enjoyed tremendous support from developers, researchers and scientists who want to contribute to the debate about the impact of AI on society. I have also seen AI developers and researchers donate their time directly to CSOs to help build data literacy and data management capacity.

More broadly, I think the AI community needs to continue to focus on addressing the root causes of digital inequality. We need to be aware of how the models we are developing for the digital economy can sometimes become a driver of ethical problems. And we need more support when we see problems emerging.

I think the AI community wants to build socially responsible models and technologies. They just need the tools, frameworks and encouragement to go do it.

About Valentine Goddard

Valentine Goddard is the founder and executive director of AI Impact Alliance, an independent non-profit organization operating globally, whose mission is to facilitate the ethical and responsible implementation of artificial intelligence. She is a member of the United Nations Expert Groups on "The Role of Public Institutions in the Transformative Impact of New Technologies" and on "Socially just transition towards sustainable development: The role of digital technologies on social development and well-being of all". Ms. Goddard sits on several committees related to the ethical and social impact of AI and contributes to public policy recommendations related to the ethical and normative framework of AI.
