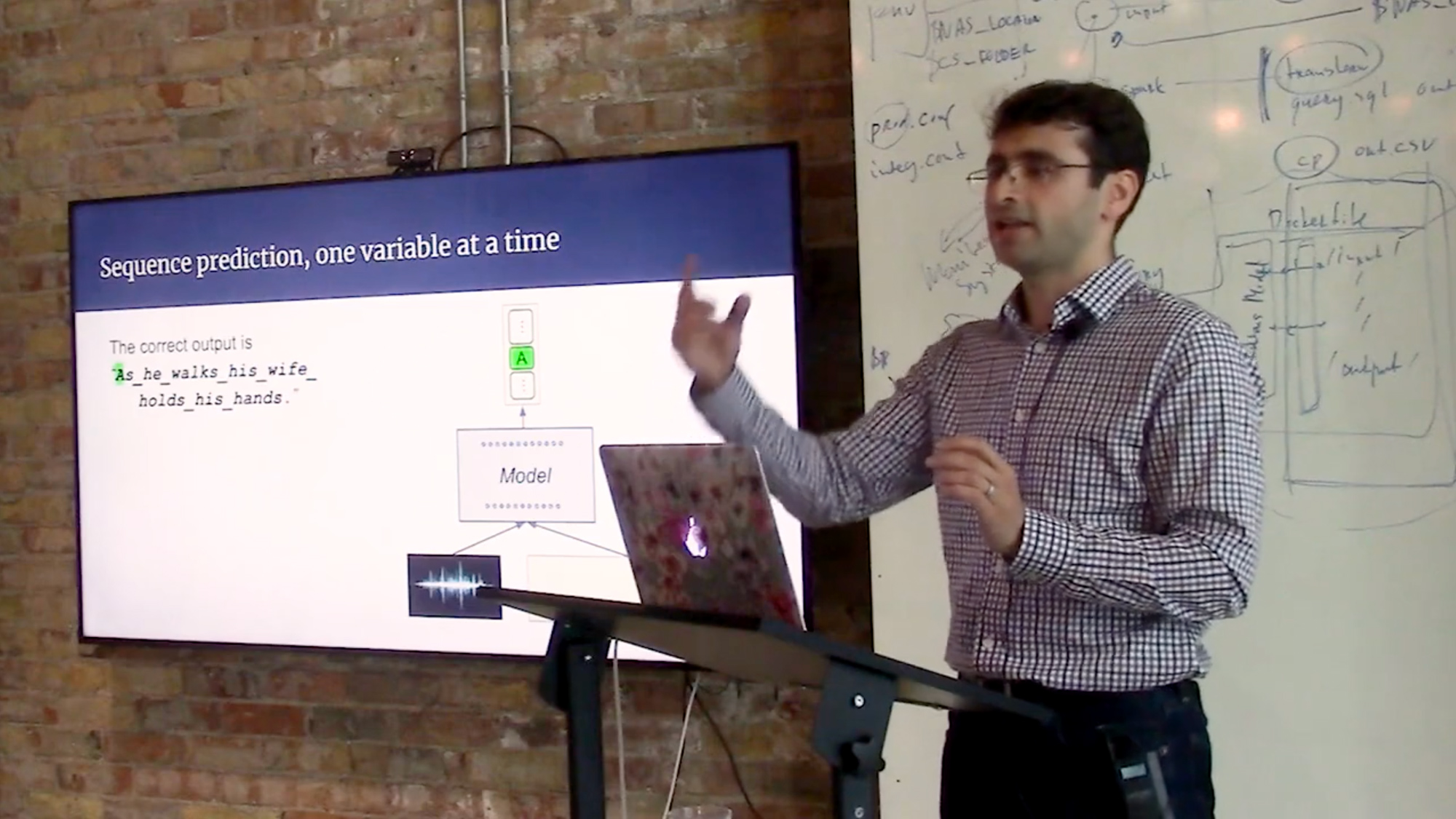

Neural sequence models have seen remarkable success across sequence prediction tasks, including machine translation and speech recognition. I will give an overview of teacher forcing, the predominant approach to training supervised neural sequence models. Then, I will present optimal completion distillation (OCD), which improves upon teacher forcing by training a sequence model on its own mistakes. Given a partial sequence generated by the model, we find the completions that minimize the total edit distance to the target and teach the model to mimic these optimal completions as closely as possible. OCD achieves state-of-the-art speech recognition results on the WSJ dataset.

In the second half of the talk, I will focus on sequence prediction tasks that involve discovering latent programs as part of the optimization. I will present our approach, called memory-augmented policy optimization (MAPO), which improves upon REINFORCE by expressing the expected return objective as a weighted sum of two terms: an expectation over a memory of trajectories with high rewards, and a separate expectation over the trajectories outside of the memory. MAPO achieves state-of-the-art results on the challenging WikiTableQuestions dataset.
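To make the optimal-completion idea behind OCD concrete, here is a minimal sketch of how the optimal next tokens for a model-generated prefix could be found with edit-distance dynamic programming. It is an illustration under stated assumptions, not the implementation from the talk: the function names `edit_distance_row` and `optimal_next_tokens` and the `EOS` marker are hypothetical, and the sketch only identifies the target tokens to imitate; it does not include the model or the training loss.

```python
EOS = "</s>"  # hypothetical end-of-sequence marker, chosen for illustration


def edit_distance_row(prefix, target):
    """Return the last row of the Levenshtein table.

    row[j] is the edit distance between `prefix` and `target[:j]`.
    """
    row = list(range(len(target) + 1))  # distances for the empty prefix
    for i, p in enumerate(prefix, start=1):
        new_row = [i]  # distance from prefix[:i] to the empty target prefix
        for j, t in enumerate(target, start=1):
            cost = 0 if p == t else 1
            new_row.append(min(
                row[j] + 1,         # prefix token is an extra insertion
                new_row[j - 1] + 1,  # target token was skipped
                row[j - 1] + cost,   # match or substitution
            ))
        row = new_row
    return row


def optimal_next_tokens(prefix, target):
    """Tokens that keep the final edit distance to `target` as low as possible.

    Every target position j whose prefix target[:j] is closest to `prefix`
    contributes the token that follows it (or EOS at the end of the target).
    """
    row = edit_distance_row(prefix, target)
    best = min(row)
    return {
        target[j] if j < len(target) else EOS
        for j, d in enumerate(row)
        if d == best
    }
```

For example, with target `"abc"` a correct prefix `"a"` has a single optimal continuation `"b"`, whereas the mistaken prefix `"x"` has two: `"a"` (treating `"x"` as an insertion) and `"b"` (treating it as a substitution for `"a"`). A training target that spreads probability over such a set is one way to teach the model how to recover from its own errors.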
