Since the early days of the internet, Mozilla has championed a more open, inclusive and accessible internet. Now the organization has set its sights on trustworthy AI. In this article, we talk with Mark Surman, President and Executive Director of Mozilla, about his organization’s focus and the importance of using open-source approaches.

The views expressed in this article are those of the interviewee and do not necessarily reflect the position of RBC or RBC Borealis.

Tell us about Mozilla and your organization’s interest in AI.

Since the beginning, Mozilla’s entire purpose has been to make sure that the digital world is good for people. A lot of our focus has been on making it more open, inclusive and accessible to all. Over the past two years, we’ve increasingly focused on working towards making AI more trustworthy – where humans have control over it and where people who abuse it are held accountable.

I think what’s going on today isn’t all that different from what happened when the internet was widely commercialized about 25 years ago. That unlocked a massive wave of digital transformation. And a lot of great things came from that. But also a lot of challenges, particularly related to power. It opened up a lot of questions about who was in control and who had responsibility.

AI is doing the same thing today. It is changing how we work and how we interact with each other. And it is raising a lot of the same questions about power. We feel that – as companies like OpenAI and Google push forward with new products and services based on AI – there must also be people looking at how we ensure those products are developed responsibly, with humans in control.

How do open-source models and approaches help to create solutions to these big problems?

There are two big reasons why open source needs to be part of the solution. The first is collaboration. Open source is a proven way to solve hard problems, bringing together people with various capabilities to collaborate without all being at the same company. With open source, you get academics, the public sector, the private sector, students and NGOs all working together to solve really difficult problems.

The second reason is that open source often lends itself to public interest solutions. So when you think about solving problems like transparency or interpretability of these big models, open source approaches offer ways for us to coordinate that work and ensure it is aligned with the broader interest and not controlled by one particular set of commercial interests.

How do you think Mozilla can help drive positive action on these issues?

We really want to move the needle on some of the key issues. One of the things we are focused on is transparency and interpretability. We believe those are areas where we need pressure from the public interest because, frankly, the problem won’t get cracked without it. There needs to be a public driver for that kind of research.

One interesting field of research is around what is sometimes called a Personal AI – essentially an AI that represents me as a person. The reality is that we’re all cognitively outgunned by these systems every minute of every day. And all of the systems we interact with tend to represent the interests of whoever runs them. We think there is room for systems whose only purpose is to make sure our interests are represented in all of the automated transactions we conduct every day.

At Mozilla, we use a range of techniques to drive action – things like technology research, product policy, digital literacy and so on. On things like Personal AI, we start by investing in research on the topic. That brings us to topics like human agency, data provenance and data governance. But then we quickly try to turn that into products that represent human values.

You recently launched the Trustworthy AI Challenge. How will that help contribute to the solutions?

The Trustworthy AI Challenge is a recognition that we just can’t solve these problems on our own. I don’t think any one organization or group can. It has to be a collaborative effort to ensure the interests of humans and humanity are being taken into account. So the Trustworthy AI Challenge is about inviting people who have ideas about how to use AI to advance the values in the Mozilla Manifesto to put them forward to compete for a prize.

We also recently launched Mozilla Ventures, which aims to support companies that are trying to crack the code on trustworthy AI. We want to help those companies be successful. So, where it makes sense, we are investing in them.

What is your call to action for the industry?

Wherever you sit in the AI ecosystem – whether you are a user of digital systems (which is everybody, by the way), a core deep researcher or a worker in the government or private sector – there really is an imperative to be asking these questions about how we want this stuff to work and how we make sure it works in our individual and collective interest. We’re all going to need to work together if we want to move these topics in the right direction.

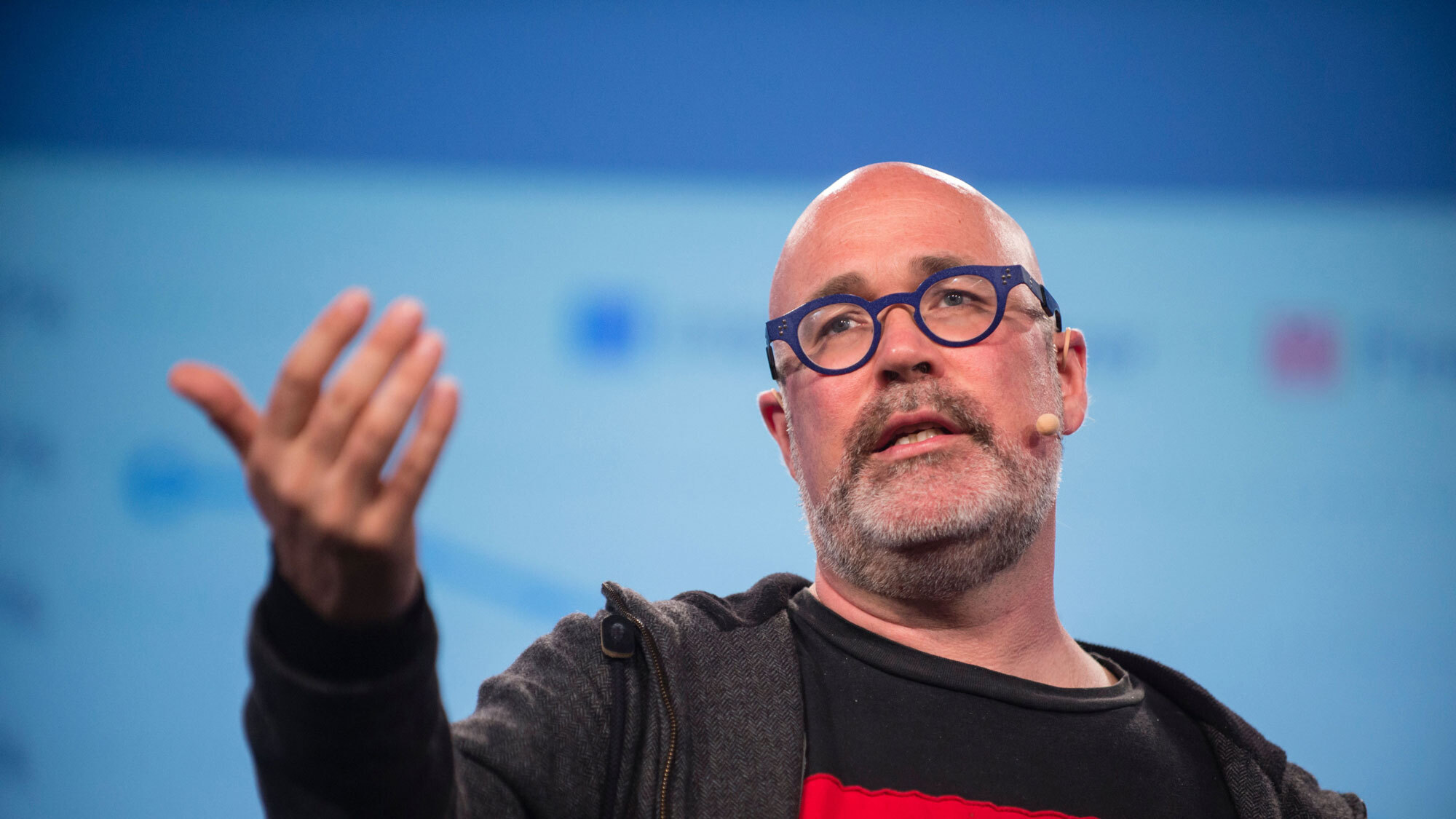

About Mark Surman

Mark is President and Executive Director of Mozilla, a global nonprofit that does everything from making Firefox to standing up for issues like online privacy. Prior to Mozilla, Mark spent 15 years leading organizations and projects promoting the use of the internet and open source for social empowerment. Mark’s current focus is on Mozilla’s efforts to champion more trustworthy AI in the tech industry.

Advancing Responsible AI

Responsible AI is key to the future of AI. We have launched the RESPECT AI hub to share knowledge, algorithms, and tooling to help advance responsible AI.

Visit the RESPECT AI hub