I was lucky enough to attend this year’s ICML conference in Stockholm, Sweden from July 10-15. Below, I discuss three talks that caught my attention among this year’s key scholarship.

Paper: “This looks like that: deep learning for interpretable image recognition”

Authors: Chaofan Chen, Oscar Li, Alina Barnett, Jonathan Su, Cynthia Rudin

Presenter: Cynthia Rudin

The aptly titled paper, “This looks like that,” involves learning “prototypes” that correspond to common features characterizing object categories. The output of such a classifier is the object category and a set of prototypes that closely matches the sample image. The title-motivating interpretation is that this input image is that particular category because it looks like these other canonical images from that category.

This paper interested me because it was something new; specifically, a new application category that can be demonstrated on a small dataset. Digging deeper, you can see there is some technical machinery involved in figuring out an appropriate cost function for training the parameters of the model. But the key innovation here is the new application category. It’s only recently that we’ve had access to this set of fully parallel computing architectures, and to me, artificial intelligence is about figuring out what to do with all these parallel computing machines. What we end up doing with them is still totally up in the air and fills me with promise for the field.

One weakness, however, is the authors’ example prototypes. The authors claim their technique mimics the type of analysis performed by a specialist in explaining why certain objects fall within certain categories. In the supplementary materials, for example, we see an automatically classified image of a bird labelled with what is clearly its beak, upper tail, and lower tail. As an analogy, the author shows a human-labelled image of a bird with similarly named components. This is the strongest example in the paper of a set of components that are “interpretable.” Their idea is that the way a human would explain an image of a bird is by pointing to different parts of the object and suggesting “this is an image of a bird because it contains these essential features of a bird.” However, the other examples given, that of a truck placed beside multiple images of vehicles, for instance, don’t seem to have the same level of interpretability; they just look like other pictures of vehicles and not “prototypical parts of images from a given class” as suggested in the paper’s introduction. This is, of course, a somewhat subjective criticism: figuring out how to make this more objective seems to be an area of future research.

Overall, I found it notable that this paper only tested the algorithm on CIFAR 10 and 10 classes from CUB-200-2011 (two small datasets), which conceptually may be seen as limiting the scope, but it presented a novel application that was very well received by the audience.

Paper: Robust Physical-World Attacks on Deep Learning Visual Classification

Authors: Kevin Eykholt, Ivan Evtimov, Earlence Fernandes, Bo Li, Amir Rahmati, Chaowei Xiao, Atul Prakash, Tadayoshi Kohno, Dawn Song

Presenter: Dawn Song

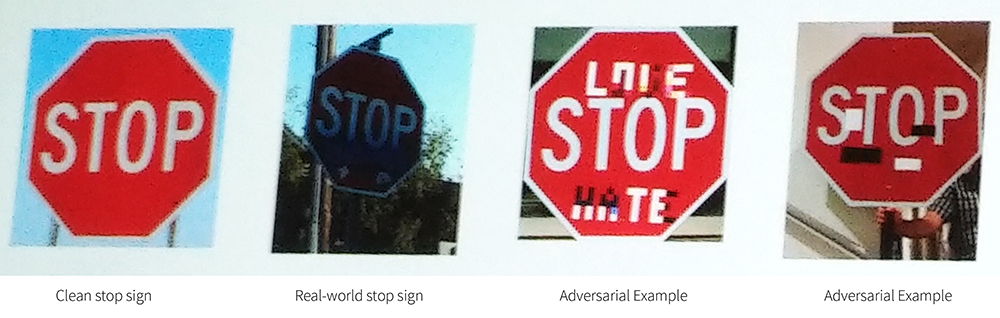

In this talk, Dawn Song presented work on what she and her fellow authors call “Robust Physical Perturbations”. The perturbations in this case are part of the adversarial attacks whose purpose is to illuminate weaknesses in or outright break existing classifying techniques. In this case, Dawn showed a video of a stop sign with a type of physical altercation resembling graffiti, then demonstrated how the stop sign was subsequently misinterpreted as a speed limit sign reading “45 mph.” In addition to this problem, the stop sign was misclassified as a speed limit sign from many different angles and distances throughout the duration of the video. These errors have obvious safety implications, which I will examine below:

- There was a concrete connection to a real engineering problem – the autonomous vehicle safety problem. For instance, a nefarious actor could decide to vandalize stop signs so they’d appear as real stop signs to a human observer, but they’d be classified as something that doesn’t require stopping and could cause an accident.

- The authors identified another interesting constraint: that the attack had to look like what we consider “normal” graffiti so the vandalism wouldn’t appear suspicious to a human observer. If the graffiti looks fake, it’s easier for a human to recognize these real-world inconsistencies and then identify the situation as an attempted adversarial attack on the classifier, thus motivating the human to fix the sign sooner. To demonstrate this point, the authors show some pictures of their proposed adversarial attacks: one of the attacks appears to be the words LOVE-HATE applied to the stop sign that was successful in causing a misclassification. To me this example looked like real-life graffiti that is occasionally observed on stop signs.

When you dig more deeply into the paper, it’s clear that the authors address the second point by using a masking technique. In this version, the authors perturb an input image to create an adversarial example, then mask small perturbations to zero so that the end result requires only small portions of the image to be perturbed in order to construct the adversarial example (although in this case, the small portion of the image that gets perturbed is perturbed rather significantly). This method is distinguishable from some of the other adversarial techniques used where each pixel is restricted to be perturbed by only a small amount. In this case, a small fraction of pixels are perturbed by a large amount.

There are several rules of thumb as well as questions I can draw from these adversarial examples:

- If an attacker is aware of the internal parameters of the model being attacked, then it is much easier to construct an adversarial example. The authors assume the internal parameters are known, and this is probably reasonable because of the possibility of black box extraction attacks; that is, a set of techniques like those introduced by Ristenpart et al. that allow an adversary to estimate the weights of a neural network by just performing black box queries of the model.

- You should ground the analysis in real-world costs and benefits. This way, the constrained problem of stop sign detection makes the task more real, and perhaps more informative.

- We lack the language to really discuss “interpretability” and “robustness to adversarial attack” so this seems like an interesting open area for research exploration. However, the papers I’ve noted here are good examples of how this can be done.

- The fact that the “masking technique” to make the adversarial attack resemble typical graffitti worked is notable: it suggests that if we constrain what the attack is to look like, at least somewhat, we can still create successful attacks. It seems like there could be many more ways to make adversarial attacks beyond the existing techniques.

Paper: Intelligence per Kilowatthour

Authors: Max Welling

Presenter: Max Welling

In this talk, Prof. Welling demonstrated a pruning algorithm with a well-tested robustness. The key idea with pruning algorithms is that you can start with a densely connected neural network, then iteratively prune some of the edges to retrain the network. Sometimes compression rates of 99.5% can be obtained.

I think the most important point in the talk comes from the slide above: “AI algorithms will be measured by the amount of intelligence they provide per killowatthour.” As the amount of data balloons, and machine learning algorithms use increasingly large numbers of parameters, we will be forced to measure our AI algorithms per unit energy. If not, I suspect many tasks on the frontiers of artificial intelligence will simply be infeasible.

Taking inspiration from Max’s talk, I noted that one way of optimizing this metric is by decreasing energy consumption while maintaining performance on a previously identified task. But another way of optimizing this is by finding new ways to measure exactly how intelligent these systems are. I suspect that approaches like that in Cynthia Rudin’s talk discussing “this looks like that” may be a way of increasing the “intelligence” part of the equation. Finding ways to energy-efficiently create stop sign detectors that are robust to adversarial graffiti-style attacks like those discussed by Dawn Song could be another way to increase the intelligence of these models.