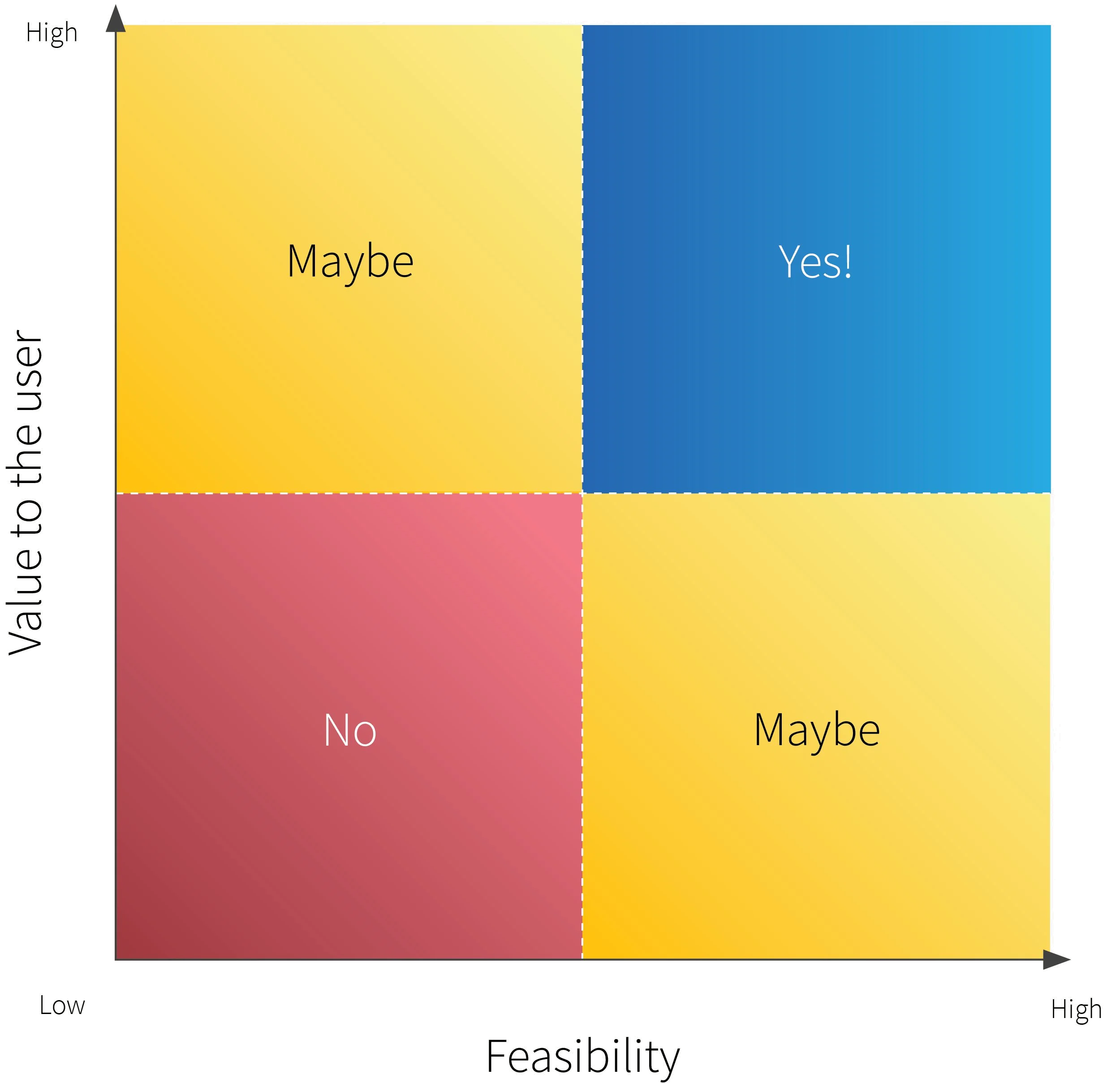

The world is full of 2×2 matrices designed to help product development teams prioritize work and decide what to build next. IBM Design’s matrix exercises, for example, plot user value against technical feasibility. Others take different approaches.

But are these simple frameworks also useful for prioritizing machine learning features? The short answer is “kind of”. But certainly not within a traditional 2-hour design session.

What’s different about machine learning?

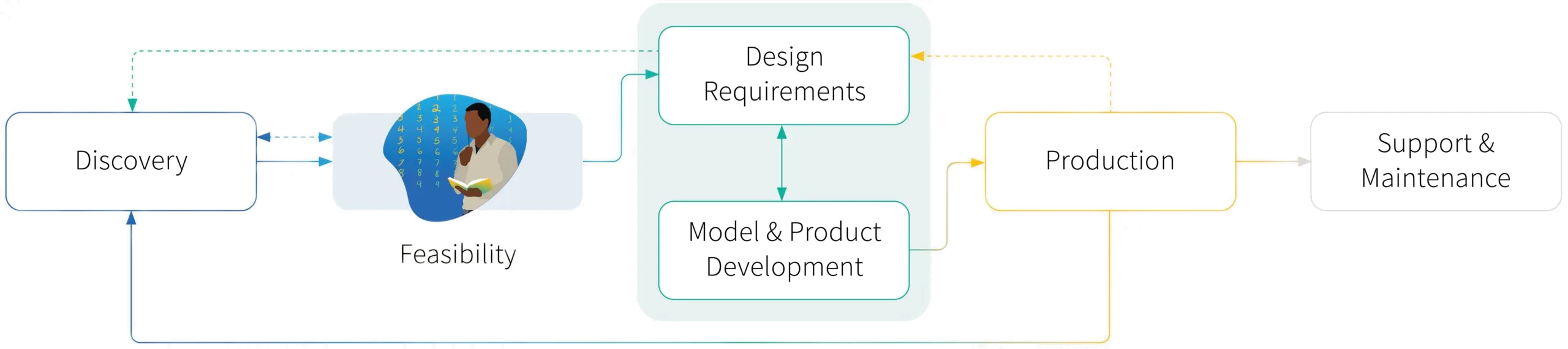

The challenge with machine learning systems is that it’s very hard for teams – including machine learning scientists – to estimate how difficult it will be to build a model or feature without first experimenting with data and building first-pass, baseline models for a task. Put simply, feasibility can’t be estimated through a one-time judgement call from a seasoned technical team: it’s an explicit, time-boxed phase in the product development lifecycle.

It’s also iterative, as the team may conclude that the original problem articulated during Discovery isn’t solvable, but a variation (perhaps a down-scoped version of the problem) is. Then the focus shifts to whether this newly-formulated task still delivers impact for the business and end-users.

Clearly, impact-feasibility analyses are still very useful for machine learning projects. But they must be viewed as living documents that evolve and solidify as the team learns more about the data, algorithms, and technical constraints of their task and problem domain.

At RBC Borealis, we call this phase a feasibility study and we use it at the beginning of our projects to help us decide whether or not to pursue a particular project and to manage the expectations of our business partners.

In this post, we’ll explore some of the more common questions faced during the feasibility study phase. And we’ll place particular focus on how you can communicate progress throughout the feasibility phase to ensure business stakeholders’ expectations are properly managed.

Not all feasibility studies are created equal

For the machine learning research team, the big questions during the feasibility study phase are how quickly they can get to a baseline model performance for the task and how quickly they can show measurable progress on that baseline.

The key to doing this well is to develop a practice of rapid experimentation, where the machine learning team can iteratively cycle through experiments using different model architectures and parameters for the task. As we discussed in a prior post about using config files to run machine learning experiments, it helps to have the right platform and tools already set up to enable reproducibility, parallelization, and collaboration between different members of the team.
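To make the idea of config-driven, reproducible experimentation concrete, here is a minimal sketch. It assumes a hypothetical `train_and_evaluate` function that stands in for real training and returns a fake score. The `ExperimentConfig` fields are illustrative only; they are not the config format from the earlier post. The point is that each run is fully described by a saved config with its own seed, so independent runs can execute in parallel and be repeated exactly.

```python
import json
import random
from concurrent.futures import ThreadPoolExecutor
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ExperimentConfig:
    # Illustrative fields; a real config would mirror your own model code.
    name: str
    model: str
    learning_rate: float
    seed: int


def train_and_evaluate(cfg: ExperimentConfig) -> float:
    """Hypothetical stand-in for training: returns a deterministic fake score."""
    rng = random.Random(cfg.seed)  # per-run RNG, so the same config gives the same score
    return round(rng.random(), 4)


def run_all(configs: list[ExperimentConfig]) -> dict[str, float]:
    # Run independent experiments in parallel; map() preserves input order.
    with ThreadPoolExecutor(max_workers=4) as pool:
        scores = list(pool.map(train_and_evaluate, configs))
    return {cfg.name: score for cfg, score in zip(configs, scores)}


configs = [
    ExperimentConfig("logreg-lr0.1", "logistic_regression", 0.1, seed=0),
    ExperimentConfig("gbm-lr0.05", "gradient_boosting", 0.05, seed=1),
]
# Persist the exact configs alongside results so any run can be reproduced later.
print(json.dumps([asdict(c) for c in configs], indent=2))
print(run_all(configs))
```

Keeping the seed inside the config, rather than in global state, is what lets parallel runs stay reproducible.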

At this phase in the process, the team isn’t trying to build the best architecture for the task; rather, they are trying to decide if there is a solution to the problem and if they can deliver measurable results or measurable lift above the baseline. A review of existing literature can help drive thoughtful choices about experimental design before the iteration process begins.
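A simple way to report "measurable lift above the baseline" is to compare a candidate model against a trivial baseline on the same held-out data. The sketch below assumes a majority-class baseline and plain accuracy for illustration; the labels and predictions are hypothetical toy data, not results. In practice the metric should be whichever one the business cares about.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    # Fraction of predictions that match the true labels.
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def majority_baseline(y_train, n):
    # Simplest possible baseline: always predict the most common training label.
    label = Counter(y_train).most_common(1)[0][0]
    return [label] * n


def lift(candidate, baseline):
    # Absolute and relative improvement of a candidate metric over the baseline.
    delta = candidate - baseline
    return delta, delta / baseline


# Hypothetical toy labels, for illustration only.
y_train = [0, 0, 0, 1, 0, 1, 0, 0]
y_test = [0, 1, 0, 0, 1, 0, 0, 1, 0, 0]
candidate_preds = [0, 1, 0, 0, 1, 0, 1, 1, 0, 0]

base_acc = accuracy(y_test, majority_baseline(y_train, len(y_test)))  # 0.7
cand_acc = accuracy(y_test, candidate_preds)  # 0.9
abs_lift, rel_lift = lift(cand_acc, base_acc)
print(f"baseline={base_acc:.2f} candidate={cand_acc:.2f} lift={abs_lift:+.2f} ({rel_lift:+.0%})")
```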

Nuances emerge

Naturally, no two feasibility phases will be identical. The steps and activities, and even how long the phase lasts, depend on the nature of the task. And there are many nuances and decisions to be made.

For example, is the task to build a brand-new ML feature? Or is it to improve on an existing algorithm? The differences related to this particular nuance (one we encounter frequently at RBC Borealis) can be significant.

When creating a brand-new ML feature, delivering value with an algorithm can often be easier … as long as there’s actually a machine learning problem to solve (as Wendy noted in her blog on discovery, sometimes the team will find that a rules-based solution – or a mix of rules-based and ML – is actually better suited to the problem). Confirming it is a problem that actually requires ML is ‘step one’ on a new build.

The second challenge is that the business won’t yet have a clear answer to many of the quantitative performance requirements the ML team needs to create a viable solution. They’ll likely have an intuitive sense for what error rate they can accept, but probably won’t be able to immediately stipulate what accuracy, precision, or recall they need from a model in order to pass it into production.
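One reason these conversations are hard is that a single number such as accuracy can hide very different error profiles. The sketch below uses hypothetical confusion-matrix counts (for example, cases a model flags for review) to show two models with identical accuracy but a different precision/recall trade-off. Which one is acceptable depends on the business's tolerance for false alarms versus missed cases.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # flagged and actually positive
    fp: int  # flagged but actually negative
    fn: int  # positive but missed
    tn: int  # negative and correctly left unflagged

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.fn + self.tn)

    def precision(self) -> float:
        # Of everything flagged, what fraction was actually positive?
        return self.tp / (self.tp + self.fp) if self.tp + self.fp else 0.0

    def recall(self) -> float:
        # Of all actual positives, what fraction did we flag?
        return self.tp / (self.tp + self.fn) if self.tp + self.fn else 0.0


# Hypothetical counts on 1,000 examples, for illustration only.
model_a = Confusion(tp=80, fp=20, fn=20, tn=880)
model_b = Confusion(tp=60, fp=0, fn=40, tn=900)

for name, m in [("A", model_a), ("B", model_b)]:
    print(f"model {name}: accuracy={m.accuracy():.2f} "
          f"precision={m.precision():.2f} recall={m.recall():.2f}")
```

Both models are 96% accurate, but model B never raises a false alarm while missing 40% of positives. Walking the business through a comparison like this can help turn an intuitive error tolerance into a concrete metric target.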

This is one of those points where great communication and collaboration come into play. Determining the baseline becomes a dialogue between the machine learning team and the business team. The business team may become frustrated by questions they can’t answer, while the lack of precision up front can frustrate the machine learning team. Product management needs to constantly share results between the business and technical teams, serving as a communication bridge throughout this process.

When improving upon an existing algorithm, by contrast, teams often face less ambiguity around task definition: an algorithm with baseline performance already exists. The task is to make enough measurable improvement upon this baseline to merit production. That means the real question is how to timebox initial explorations in a way that provides reasonable confidence that we can deliver a better solution.

Quick tip: If the potential delta in business impact is very high, that timebox may be quite long, sometimes up to 6-12 months. If the potential delta in business impact is lower, it’s best to keep the timebox shorter and experiment quickly with newer algorithms.

Another nuance worth noting arises when the machine learning team uses off-the-shelf pre-trained models that it can fine-tune to the specifics of the domain and environment. With accessible, high-quality pre-trained models, teams already know the general approach works; what they are trying to find out is how well the models adapt to a specific domain.

Iterating on impact-value analysis and business tolerance for prediction errors

As you progress through the feasibility study phase and explore baseline model performance and architectures, you may find that you need to reframe the task. Consider, for example, an initial task of building a cash flow prediction solution for a bank. Variations in data distributions may make it clear that cash flow cannot be accurately predicted for all industries nine months into the future. What is possible, however, is to predict cash flow for retailers and restaurants four months into the future.
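One way to surface a down-scoped task like this is to break backtest error out by segment instead of reporting a single aggregate number. The sketch below assumes mean absolute percentage error (MAPE) as the metric and a 10% tolerance; both are illustrative choices that would need to be agreed with the business. The backtest values are hypothetical, not real results.

```python
def mape(actual, forecast):
    # Mean absolute percentage error; assumes no actual value is zero.
    return sum(abs(a - f) / abs(a) for a, f in zip(actual, forecast)) / len(actual)


def feasible_segments(backtests, tolerance):
    # Keep only the (industry, horizon) segments whose backtest error is acceptable.
    return sorted(
        seg for seg, (actual, forecast) in backtests.items()
        if mape(actual, forecast) <= tolerance
    )


# Hypothetical backtests keyed by (industry, forecast horizon in months):
# each value is (actual cash flows, forecast cash flows).
backtests = {
    ("retail", 4): ([100, 120, 110], [95, 125, 108]),
    ("restaurants", 4): ([50, 60, 55], [48, 63, 54]),
    ("manufacturing", 9): ([200, 180, 220], [150, 240, 170]),
    ("retail", 9): ([100, 120, 110], [70, 150, 80]),
}

print(feasible_segments(backtests, tolerance=0.10))
```

The surviving segments are candidates for the down-scoped task; whether they are worth building is still a business decision.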

The business team then needs to decide whether that down-scoped version adds sufficient value. If it does, that version may become the first minimum viable product (MVP), to be developed further over time. If the business team decides the scaled-down version does not deliver sufficient value, however, the project should probably be halted so that effort can be redirected to another task.

As these discussions are happening, product managers will want to work with design to understand the cost of errors in the production solution. Essentially, you want to know how often the system needs to be right in order to be useful. The acceptable error rate varies by use case: a product recommendation engine, for example, will tolerate a very different error rate than a banking or healthcare solution.
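Once the business can put rough costs on each type of error, those costs can guide where a classifier's decision threshold should sit. The sketch below shows cost-weighted threshold selection, one common way to operationalize error tolerance; it is not presented as the method used in this post. The scores, labels, and costs are all hypothetical placeholders for figures the business and design teams would supply.

```python
def expected_cost(scores, labels, threshold, cost_fp, cost_fn):
    # Average cost per decision when flagging every score >= threshold.
    total = 0.0
    for score, label in zip(scores, labels):
        flagged = score >= threshold
        if flagged and not label:
            total += cost_fp  # false positive
        elif not flagged and label:
            total += cost_fn  # false negative
    return total / len(labels)


def best_threshold(scores, labels, cost_fp, cost_fn, candidates):
    # Pick the candidate threshold with the lowest expected cost.
    return min(candidates, key=lambda t: expected_cost(scores, labels, t, cost_fp, cost_fn))


# Hypothetical validation scores and labels (1 = positive case).
scores = [0.1, 0.3, 0.35, 0.6, 0.65, 0.8, 0.9]
labels = [0, 0, 1, 0, 1, 1, 1]
candidates = [0.2, 0.5, 0.7]

# Expensive misses push the threshold down; expensive false alarms push it up.
print(best_threshold(scores, labels, cost_fp=1, cost_fn=10, candidates=candidates))
print(best_threshold(scores, labels, cost_fp=10, cost_fn=1, candidates=candidates))
```

The same model can be "good enough" or not depending on these costs, which is why they are worth pinning down during feasibility.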

This is also the right time to engage with ethics and compliance teams to understand any governance restrictions on the model. For example, does the use case require an explainable model? If so, that requirement will constrain the choice of architecture, since different architectures permit different degrees of explainability. Does the model have constraints on fairness, bias, or privacy? These would shift the objective function of the task and, if the constraints are strict, could even affect feasibility.

The key thing to remember is that ethical questions should be asked up front, at the very beginning of the development process. They should never be left until the governance and compliance review at the end of the cycle.

Sketch the system architecture and review SLAs

While the research team explores baselines, the production engineering team should be assessing the viability of integrating the model into the downstream systems and sketching the system architecture.

RBC Borealis is part of the complex enterprise environment of RBC. That means our engineering team must always be thinking about how to adapt our production approach to the various data pipelines, API architectures, downstream applications, and business processes across the bank's business lines.

While not all machine learning teams will face the same challenges, here are some common questions all ML engineering teams will want to consider during the feasibility and planning phases:

- Where will outputs from the production model be served? To an internal user who will incorporate them into a business process? Or directly into a software system to power a feature?

- Who owns the required data in the upstream systems? Do they have any restrictions on how we can use it?

- Will production data be available for the system in real-time? Is data housed in multiple systems? What logic do we need to add to the outputs before they are served to the downstream user/system? (For more on data in the ML product development process, check out this blog)

- What are the security, privacy, and compliance requirements on the system?

- What SLAs are required by the downstream systems for the model outputs to be useful?

- What are the expected costs of maintaining the system in the long term (infrastructure, retraining, etc.) and how do they compare to the expected benefits?
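SLAs are often expressed as a latency percentile (for example, "95% of requests answered within 50 ms"), and a quick load test of a prototype can show early whether that target is realistic. The sketch below assumes a nearest-rank percentile and a hypothetical 50 ms limit; the latency samples are invented for illustration. Real SLAs may also cover availability, throughput, or data freshness.

```python
import math


def percentile(values, pct):
    # Nearest-rank percentile: the smallest value with at least pct% of samples at or below it.
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_sla(latencies_ms, pct, limit_ms):
    # True if the pct-th percentile latency is within the SLA limit.
    return percentile(latencies_ms, pct) <= limit_ms


# Hypothetical end-to-end latencies (ms) from a load test of a prototype endpoint.
latencies_ms = [12, 15, 11, 40, 14, 13, 18, 16, 90, 17]

print(meets_sla(latencies_ms, pct=50, limit_ms=50))
print(meets_sla(latencies_ms, pct=95, limit_ms=50))
```

Here the median is comfortably within the limit, but a single slow request breaks the p95 target, which is exactly the kind of finding worth raising before production design begins.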

Product management will want to incorporate answers to these questions into the final feasibility analysis, and use them to inform a decision on whether to proceed to the next step in the product lifecycle: designing production system requirements.

Conclusion

Unlike traditional development workflows, the machine learning feasibility study phase is used to dig into the data and quickly conduct experiments to establish baseline performance on a task.

This is not just about populating a 2×2 matrix: it requires constant communication between the technical teams and the business teams. And it requires product managers to iteratively reassess impact and feasibility as the teams learn more about the problem space and possibilities.

Feasibility studies also provide an excellent opportunity to manage stakeholder expectations and risk: from the very start, executives should understand that the project may not actually be feasible, or the details may morph based on data and context. But with continuous communication and transparency through the feasibility study phase, they can cut their investments short if needed, and ensure development teams are prioritizing projects that will lead to high impact and success.
