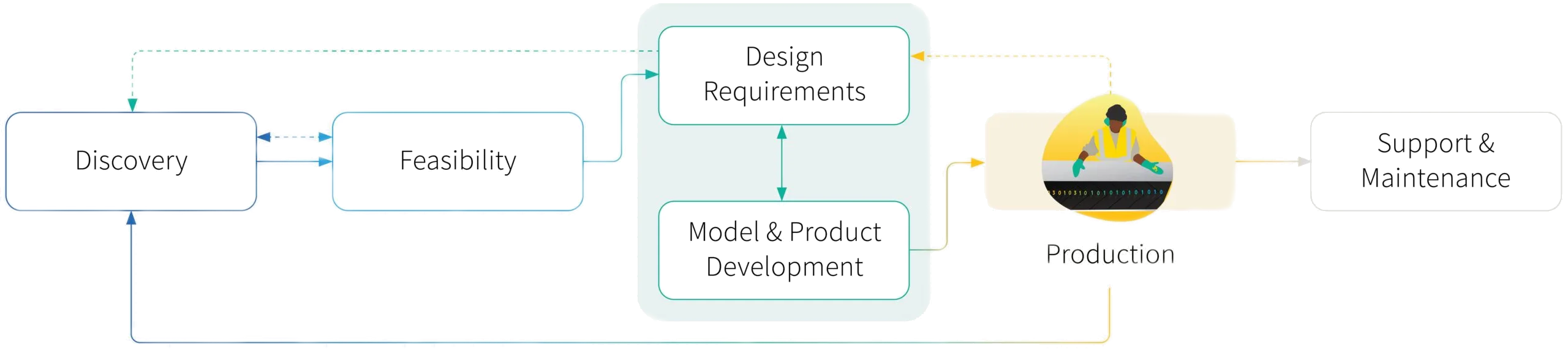

This is part 7 in our Field Guide to Machine Learning Product Development: What Product Managers Need to Know series. Read the Introduction, learn about how to manage data throughout the machine learning product lifecycle, read up on the Discovery and Feasibility phases; Design and Requirements; Model and Development; and find our thinking on the Production phase below.

The analysts at Gartner think there is an 85% chance that an average AI project will fail [1]. A few clicks over, VentureBeat reports that 87% of data science projects never make it into production [2]. The risk of failure can be substantial as you get to the production stage of the AI product development lifecycle. Yet it doesn’t have to be that way.

One of the key factors behind that failure rate is that product development teams tend to put off thinking about production until far too late in the process. Product managers, especially those used to more traditional methodologies (such as agile or waterfall), tend to take their time to properly scope the problem and make sure the model works. They don’t tend to think through production in the same way, since in an agile or waterfall context, production is relatively straightforward.

That’s a dangerous assumption to make in a machine learning context. Indeed, PMs should allocate more time and effort to think through how their models will actually be put into production. Doing this right can make or break projects.

In this article, we’ll explore how to set your product up for success in the production stage of the ML product development lifecycle. We’ll look at what ‘good’ looks like, what challenges you might face, and how to overcome those challenges and build the right mindset to avoid becoming one of the 85% of AI projects that fail.

Early is better than late

Let’s say your team has come up with a great model to predict which customers are going to make a purchase in the next 3 months. You proudly hand all that data over to the sales team, only to find that it will take them at least 6 months to act on that data. Simply put, the solution doesn’t match the need. The project is a failure.

Production considerations should be addressed early on in the project lifecycle. We’ve seen this happen in the industry time and again: teams work diligently with their heads down building something, only to find out in production that it doesn’t ‘work’ the way the business or user needs it to work. Perhaps they need to get the output in a different format. Or maybe they need it to integrate into a particular system. Or have it work on a different timeline (maybe they need something in real-time, but you planned for batch). Finding these things out at the very end of the lifecycle can be fatal to your product, so these questions must be addressed right from the start.

Good… and the barriers to better

At its most basic, the primary objective of the production stage of the ML product lifecycle is to get the output of your model where it needs to be, in front of whoever needs it, at the right time. In a traditional DevOps scenario, that is a fairly linear process. Not so for ML products. As I explain a little later in this article, MLOps (the steroid-induced cousin to DevOps) is much more complicated, inherently more uncertain and extraordinarily iterative.

That is why it is so important that production activities start right at the very beginning of the product lifecycle – from the moment you start thinking about what your model is going to do. If you have read the previous articles in this Field Guide, this won’t come as a surprise (all PMs at RBC Borealis recognize and respect the importance of thinking about production at the start). But if you only realize this fact during the production stage, you will likely find yourself in trouble.

What are some of the common considerations that PMs can start thinking about early in the process? The most obvious is alignment with the business needs and processes. That means understanding how the outputs are going to be used within a certain strategy, and how the scope of your project advances the overall strategy for the user or business.

It also means understanding the environment in which the model will be used – how will you build your data pipeline? What systems will you pull from? What systems will you write to? Do you need to provide an API? These questions need to be answered at the start to ensure that development aligns to the needs of production.
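One lightweight way to keep these questions visible is to record them as a checklist and flag whatever is still unanswered. The sketch below is only an illustration: the `ProductionRequirements` class, its field names and the `open_questions` helper are hypothetical, not part of any real tooling described in this series.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class ProductionRequirements:
    """Hypothetical checklist of production questions to answer at kickoff."""
    source_systems: Optional[list] = None       # systems the pipeline pulls from
    destination_systems: Optional[list] = None  # systems the model writes to
    delivery_mode: Optional[str] = None         # e.g. "batch" or "real-time"
    output_format: Optional[str] = None         # e.g. "csv" or "json"
    needs_api: Optional[bool] = None            # does the consumer need an API?


def open_questions(reqs: ProductionRequirements) -> list:
    """Return the names of requirements that nobody has answered yet."""
    return [f.name for f in fields(reqs) if getattr(reqs, f.name) is None]


# Example: only two of the five questions have been answered so far.
reqs = ProductionRequirements(source_systems=["crm"], delivery_mode="batch")
print(open_questions(reqs))
```

Anything the helper returns is a conversation to have with the business and technology owners before development goes much further.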

You may want to start thinking early about the privacy, compliance and legal reviews that may need to be conducted on the model. When building models within strict compliance frameworks, for example, it is critically important to conduct the proper privacy impact assessments and legal reviews. Potential challenges or additional requirements that may arise at this stage can often be dealt with early (with much less complexity and stress).

Similarly, all of RBC Borealis’s models pass through a formal model validation process where we demonstrate that the model is fair, robust, and meets all of the qualifications necessary to allow it to interact with users (whether customers or employees). Again, if we only learn in the production stage that the business requires a high level of explainability for the model, we may find ourselves losing time to additional experiments, or in the worst case, more model development.

Just as important is understanding how the product will need to integrate into the existing technology and business environment. Were we to build a new model to support customer-facing bank employees, for example, it would need to integrate into existing systems and processes across call centres and the more than 1,000 physical branches across Canada. And you’d need to train every single person on what that model does and how to use its output. Early planning can make this kind of integration much less uncertain.

It’s also worth remembering that with ML, unlike with traditional software development, production models can change very quickly. Inputs can change unexpectedly (for example, the business might decide to change vendors for a critical external data flow that feeds the model). Performance can degrade very quickly. PMs will need to think about how the model will be monitored while it is in production.
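To make the idea of monitoring inputs a little more concrete, here is a minimal sketch of one possible check: compare recent values of a single input against a baseline and flag a large shift. The function names, the sample numbers and the threshold of 3 standard deviations are all assumptions chosen for illustration; real monitoring would typically cover many features and use more robust statistics.

```python
from statistics import mean, stdev


def input_drift_score(baseline, recent):
    """Shift of the recent mean from the baseline mean, in baseline std devs."""
    spread = stdev(baseline)
    if spread == 0:
        # A constant baseline: any change at all counts as an unbounded shift.
        return 0.0 if mean(recent) == mean(baseline) else float("inf")
    return abs(mean(recent) - mean(baseline)) / spread


def has_drifted(baseline, recent, threshold=3.0):
    """Flag the input when the shift exceeds the (assumed) threshold."""
    return input_drift_score(baseline, recent) > threshold


# Illustrative values for one feature before and after a vendor change.
baseline = [10, 12, 11, 9, 10, 12, 11, 10]
after_vendor_change = [21, 19, 22, 20]

print(has_drifted(baseline, [10, 11, 12, 10]))     # values look like the baseline
print(has_drifted(baseline, after_vendor_change))  # values have clearly shifted
```

A check like this does not say why the input changed, only that someone should look before the model's outputs are trusted.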

Taking a different mindset

ML models are constantly changing. They need performance monitoring. They need updates. They will need retraining. Taken as a whole, these barriers and considerations add a lot of uncertainty and complexity to the production stage of the ML product lifecycle. This way of working requires evolutionary changes for PMs used to traditional DevOps principles – you’ll need to develop an MLOps mindset that specifically fits AI systems and ML models.

An MLOps mindset covers the entire pipeline, from the point where the data originates through to the end-user’s consumption of the model outputs. It means having the foresight to account for all of the considerations outlined above and iterate as the lifecycle progresses. It means incorporating CI/CD (continuous integration, continuous delivery) to ensure changes can be made and pushed into production quickly and easily, as well as establishing a strong process for bug checking and sign-off once the project goes into production. It should also include automatic triggers that respond to changes in performance while in production.
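As a rough illustration of what an automatic trigger might look like, the sketch below tracks accuracy over a rolling window of recent predictions and calls a callback when it drops below a floor. The `PerformanceTrigger` class, the window size and the accuracy floor are hypothetical; in practice the callback might open a ticket, page the team or start a retraining job.

```python
from collections import deque


class PerformanceTrigger:
    """Hypothetical trigger: fires when rolling accuracy falls below a floor."""

    def __init__(self, min_accuracy, window, on_breach):
        self.min_accuracy = min_accuracy
        self.outcomes = deque(maxlen=window)  # keeps only the latest results
        self.on_breach = on_breach            # called with the observed accuracy

    def record(self, prediction, actual):
        """Log one prediction/outcome pair; return True if the trigger fired."""
        self.outcomes.append(prediction == actual)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data yet to judge performance
        accuracy = sum(self.outcomes) / len(self.outcomes)
        if accuracy < self.min_accuracy:
            self.on_breach(accuracy)
            return True
        return False


# Example: collect breaches in a list instead of paging anyone.
alerts = []
trigger = PerformanceTrigger(min_accuracy=0.75, window=4, on_breach=alerts.append)
for prediction, actual in [(1, 1), (1, 1), (0, 1), (1, 0)]:
    trigger.record(prediction, actual)
print(alerts)
```

Note that this simple version fires on every new record while accuracy stays below the floor, so a real implementation would probably add a cool-down or de-duplication step.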

As this illustration shows (taken from this manifesto of MLOps principles), the MLOps environment is dynamic, iterative and complex.

Plan early for success

One important indicator of success for an ML project is how early the PM starts planning for production.

My advice would be to collaborate closely right from the discovery stage so that all of your teams are aligned – there is more you can do at this stage to prepare for production than you might think. And make sure that collaboration isn’t just with your business partner; include privacy, legal, compliance, validation and technology owners.

Once you are in production, it is also critically important to have the right engineering team with the right attitude, culture and capabilities to work through these challenges (like the technology integration) and to ensure that model performance is sufficient to meet the demands of the business.

Ultimately, it all comes back to the purpose of your product. It’s often easy to get caught up in the excitement of building an ML product. But if you keep your eye on the prize and always remember the specific need that you are trying to solve, you should be able to keep out of the weeds that often slow progress through production. At a macro level, every win, small and big, will contribute to the overall success rate of AI projects. As PMs, we can be the change we want to see.

References

[1] Gartner Says Nearly Half of CIOs Are Planning to Deploy Artificial Intelligence

[2] Why do 87% of data science projects never make it into production?
