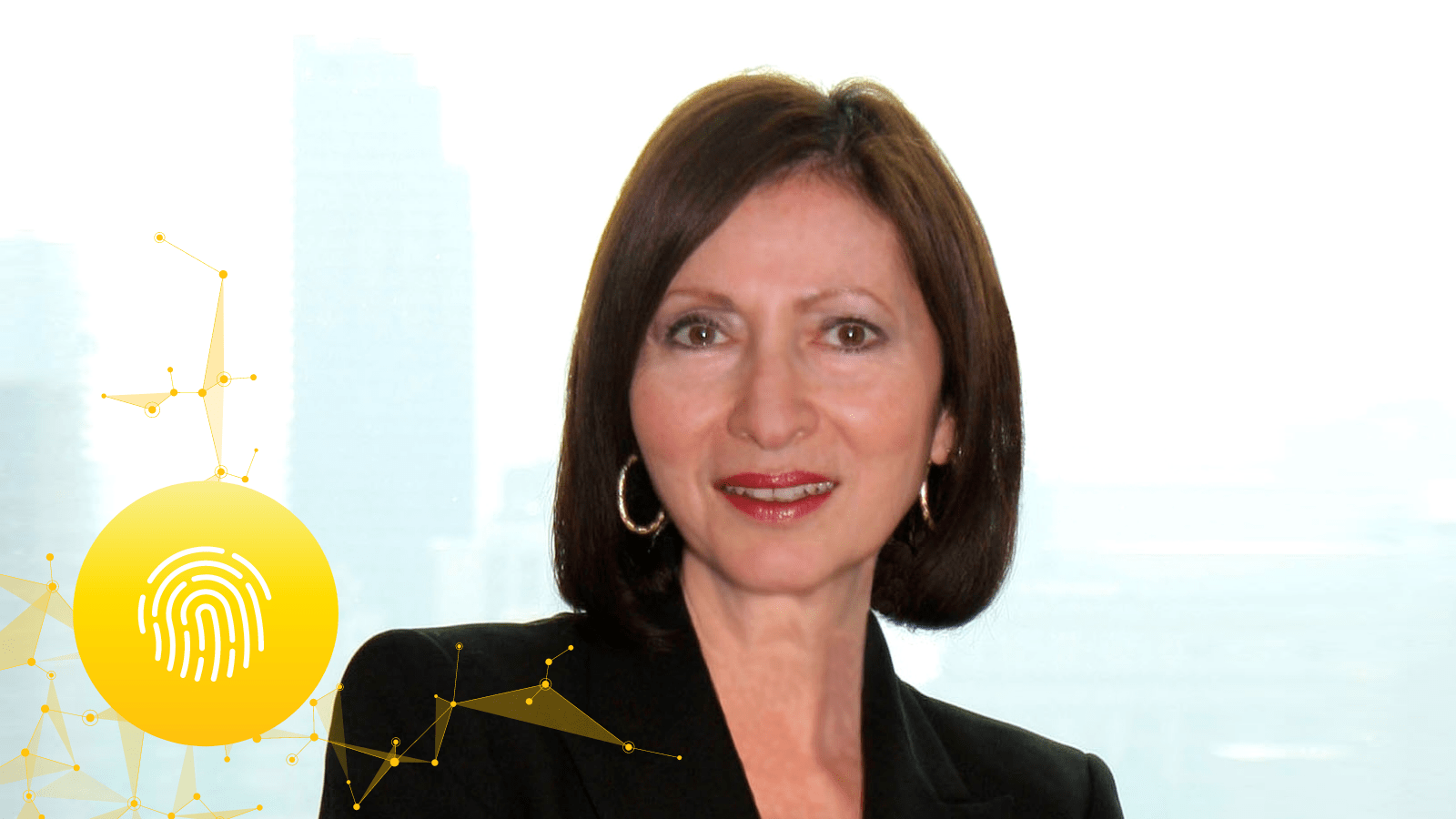

In this post, we explore the concept of ‘privacy’ with Dr. Ann Cavoukian, former three-term Information and Privacy Commissioner of Ontario and Executive Director of the Global Privacy & Security by Design Centre.

The views expressed in this article are those of the interviewee and do not necessarily reflect the position of RBC or RBC Borealis.

Are current privacy laws and regulations enough to ensure data privacy in AI?

Ann Cavoukian (AC):

The challenge with many data privacy laws is that they do not reflect the dynamic and evolving nature of today’s technology. In this era of AI, social media, phishing expeditions and data leaks, I would argue that what we really need are proactive measures around privacy.

I think we are just starting to reach the tip of the iceberg on data privacy and protection. The majority of the iceberg is still unknown and, in many cases, unregulated. And that means that, rather than waiting for the safety net of regulation to kick in, we need to be thinking more about algorithmic transparency and designing privacy into the process.

What do you mean by designing privacy into the AI process?

AC:

I mean baking privacy protective measures right into the code and algorithms. It’s really about designing programs and models with privacy as the default setting.

During my time as Information and Privacy Commissioner of Ontario, I created ‘Privacy by Design’, a framework that helps organizations prevent privacy breaches by embedding privacy into the design process. More recently, I created an extensive module called ‘AI Ethics by Design’, which was specifically intended to address the need for algorithmic transparency and accountability. Seven key principles underpin the framework, supported by strong documentation to facilitate ethical design and data symmetry. These principles, based on the original Privacy by Design framework, include respect for privacy as a fundamental human right.

Do applications like Facial Recognition worry you from a privacy perspective?

AC:

Absolutely. And I’m happy to see that facial recognition tools are routinely banned in various US states and across Canada. Your face is your most sensitive personal information. And, more often than not with these applications, nobody is obtaining individual consent before capturing facial images; there may not even be visible notification that facial recognition tools are being used.

From a privacy perspective, that’s terrible. The point of privacy laws is to give people control over their personal data. Applications like facial recognition take away all of that control. All that aside, the technology has also proven to be highly inaccurate and frequently biased; time and again, its use has been struck down in the courts of justice and of public opinion.

Is data privacy really that important to consumers? Or are they willing to trade it for convenience and service?

AC:

I think it is absolutely critical to consumers; virtually every study and survey confirms that. Consider what happened early in the pandemic. A number of governments tried to launch so-called ‘contact tracing’ apps that offered fairly weak privacy controls. Uptake was dismal. Even though the apps could potentially save lives, few people were willing to share their personal information with the government or have it stored in a centralized repository.

What worked well, on the other hand, was the Apple/Google exposure notification API. In part, it was widely adopted because it works on the majority of smartphones in use in North America today. But, more importantly, it is fully privacy-protective. I personally had a number of one-on-one briefings from Apple and was highly confident that the API collected no personally identifiable information or geolocation data. Around the world, Canada included, apps based on that API have seen tremendous uptake.

Now, remember, this is for an app that helps people avoid the biggest health crisis to face modern civilization. If they are not willing to trade their privacy for that, you would be crazy to assume consumers would trade it away simply for convenience or service.

Won’t that make things very restrictive for AI developers and business users?

AC:

Not at all. We need to get away from the view that privacy must be traded for something. It’s not an either/or, zero-sum proposition involving trade-offs. Far better to enjoy multiple positive gains by embedding both privacy AND AI measures, not one to the exclusion of the other.

I also think the environment is rapidly changing. Consider, for example, the efforts of the Decentralized Identity Foundation, a global technology consortium working to find new ways to ensure privacy while allowing data to be commercialized. Efforts like these suggest we are moving towards a world where privacy can be embedded into AI by default.

What is your advice for the AI and business community?

AC:

The AI community needs to remember that – above all – transparency is essential. People need to be able to see that their privacy has been baked into the code and program by design. I would argue that public trust in AI is currently very low. The only way to build that trust is by embedding privacy by design.

I think the same advice goes for business executives and privacy oversight leaders: don’t just accept algorithms without looking under the hood first. All kinds of potential issues, both privacy- and ethics-related, can arise when applying AI. As an executive, you need to be sure your organization and your people are always striving to protect personal data.

About Dr. Ann Cavoukian

Dr. Ann Cavoukian is recognized as one of the world’s leading privacy experts. Appointed as the Information and Privacy Commissioner of Ontario, Canada in 1997, Dr. Cavoukian served an unprecedented three terms as Commissioner. There she created Privacy by Design, a framework that seeks to proactively embed privacy into the design specifications of information technologies, networked infrastructure and business practices, thereby achieving the strongest protection possible. Dr. Cavoukian is presently the Executive Director of the Global Privacy and Security by Design Centre.