Getting up to speed with the latest Machine Learning concepts doesn’t have to be complicated.

While there’s an abundance of introductory courses and tutorials on the basics, it can be harder to dive deeper and learn foundational concepts quickly. This post rounds up our top 20 AI and Machine Learning tutorials, along with recommended readings, on topics including bias and fairness in AI, few-shot learning and meta-learning, auxiliary tasks in deep reinforcement learning, variational autoencoders, and more.

Tutorial #1: bias and fairness in AI

Author(s): S. Prince

This tutorial discusses how bias can be introduced into the machine learning pipeline, what it means for a decision to be fair, and methods to remove bias and ensure fairness. As machine learning algorithms are increasingly used to determine important real-world outcomes such as loan approval, pay rates, and parole decisions, it is incumbent on the AI community to minimize unintentional unfairness and discrimination.
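
To make "fair" concrete, here is a minimal sketch of two group-fairness criteria that are widely used in this literature: demographic parity (equal rates of positive decisions across groups) and equal opportunity (equal true-positive rates, i.e. equal approval rates among people who are truly qualified). The helper names and the toy loan data are hypothetical illustrations, not code from the tutorial.

```python
def rate_by_group(values, groups):
    """Mean of 0/1 values within each group."""
    totals = {}
    for v, g in zip(values, groups):
        count, total = totals.get(g, (0, 0))
        totals[g] = (count + 1, total + v)
    return {g: total / count for g, (count, total) in totals.items()}


def demographic_parity_gap(decisions, groups):
    """Largest difference in positive-decision rates across groups."""
    rates = rate_by_group(decisions, groups)
    return max(rates.values()) - min(rates.values())


def equal_opportunity_gap(decisions, labels, groups):
    """Largest difference in true-positive rates (approvals among truly qualified people)."""
    qualified = [(d, g) for d, y, g in zip(decisions, labels, groups) if y == 1]
    rates = rate_by_group([d for d, _ in qualified], [g for _, g in qualified])
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: model decisions (1 = approve), true outcomes, group membership.
decisions = [1, 1, 0, 1, 0, 0, 1, 0]
labels = [1, 1, 0, 1, 1, 0, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
dp_gap = demographic_parity_gap(decisions, groups)
eo_gap = equal_opportunity_gap(decisions, labels, groups)
```

Here group A is approved 75% of the time and group B 25%, so the demographic parity gap is 0.5; among qualified applicants, A is always approved while B is approved one time in three. The two criteria can disagree, which is one reason the choice of fairness definition matters.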

📖 Further Reading:

- Fairness and Machine Learning: Limitations and Opportunities by Solon Barocas, Moritz Hardt, Arvind Narayanan

- Data Decisions and Theoretical Implications when Adversarially Learning Fair Representations by Alex Beutel, Jilin Chen, Zhe Zhao, Ed H. Chi

- A comparative study of fairness-enhancing interventions in machine learning by Sorelle A. Friedler, Carlos Scheidegger, Suresh Venkatasubramanian, Sonam Choudhary, Evan P. Hamilton, Derek Roth

Tutorial #2: few-shot learning and meta-learning I

Author(s): W. Zi, L. S. Ghoraie, S. Prince

This tutorial describes few-shot and meta-learning problems and introduces a classification of methods. We also discuss methods that use a series of training tasks to learn prior knowledge about the similarity and dissimilarity of classes that can be exploited for future few-shot tasks.
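
One way to exploit learned similarity between classes is the prototypical approach from Prototypical Networks (listed below): each class in a new few-shot task is summarized by the mean embedding of its few labeled examples, and a query is assigned to the nearest class mean. A minimal sketch, assuming the embedding network has already been learned from the training tasks; the hand-made 2-D vectors stand in for its outputs, and the function names are hypothetical.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def prototype_classify(support, query):
    """Nearest-prototype classification for one few-shot episode.

    support: dict mapping class label -> list of embedded support examples.
    query:   one embedded query example.
    Returns the predicted label and a softmax over negative squared distances.
    """
    prototypes = {label: mean_vector(vecs) for label, vecs in support.items()}
    logits = {label: -squared_distance(query, p) for label, p in prototypes.items()}
    m = max(logits.values())  # subtract the max for numerical stability
    z = sum(math.exp(v - m) for v in logits.values())
    probs = {label: math.exp(v - m) / z for label, v in logits.items()}
    return max(probs, key=probs.get), probs


# A 2-way, 2-shot episode with stand-in embeddings.
support = {"cat": [[0.0, 1.0], [0.2, 0.8]], "dog": [[1.0, 0.0], [0.8, 0.2]]}
label, probs = prototype_classify(support, [0.1, 0.9])
```

Nothing about the classes "cat" and "dog" is stored in the model: a new task only needs new support examples, which is what makes the learned metric reusable across few-shot problems.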

📖 Further Reading:

- Matching Networks for One Shot Learning by Oriol Vinyals, Charles Blundell, Timothy Lillicrap, Koray Kavukcuoglu, Daan Wierstra

- Prototypical Networks for Few-shot Learning by Jake Snell, Kevin Swersky, Richard S. Zemel

- Meta-learning with memory-augmented neural networks by Adam Santoro, Sergey Bartunov, Matthew Botvinick, Daan Wierstra, Timothy Lillicrap

Tutorial #3: few-shot learning and meta-learning II

Author(s): W. Zi, L. S. Ghoraie, S. Prince

In part II of our tutorial on few-shot and meta-learning, we discuss methods that incorporate prior knowledge about how to learn models, as well as methods that incorporate prior knowledge about the data itself. These include three distinct approaches: “learning to initialize”, “learning to optimize”, and “sequence methods”.
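
"Learning to initialize" is exemplified by MAML (listed below): meta-training searches for a starting point from which a single gradient step adapts well to each task. A minimal sketch, assuming a toy family of 1-D regression tasks y = a·x and a one-parameter model f(x) = w·x, chosen so that the gradient through the inner update can be written by hand. The task slopes, inputs, and learning rates are made up for illustration.

```python
def task_grad(w, slope, xs):
    """Gradient w.r.t. w of the mean squared error of f(x) = w*x against targets slope*x."""
    s = sum(x * x for x in xs) / len(xs)
    return 2.0 * (w - slope) * s


def maml_step(w, slopes, xs_support, xs_query, inner_lr, outer_lr):
    """One outer (meta) update of the shared initialization w."""
    s_support = sum(x * x for x in xs_support) / len(xs_support)
    meta_grad = 0.0
    for slope in slopes:
        # Inner loop: one gradient step on this task's support set.
        w_adapted = w - inner_lr * task_grad(w, slope, xs_support)
        # Outer loss uses the query set at the adapted parameter; the chain rule
        # through the inner step contributes d(w_adapted)/dw = 1 - inner_lr * 2 * s_support.
        dadapted_dw = 1.0 - inner_lr * 2.0 * s_support
        meta_grad += task_grad(w_adapted, slope, xs_query) * dadapted_dw
    return w - outer_lr * meta_grad / len(slopes)


w = 0.0
for _ in range(300):
    w = maml_step(w, slopes=[1.0, 2.0, 3.0], xs_support=[1.0, 2.0],
                  xs_query=[0.5, 1.5], inner_lr=0.1, outer_lr=0.1)
```

Because every task in this toy shares the same inputs, the meta-objective is minimized at the mean slope, 2.0; with real networks the inner step is a full gradient update and the second-order term is computed by automatic differentiation.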

📖 Further Reading:

- Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks by Chelsea Finn, Pieter Abbeel, Sergey Levine

- A Simple Neural Attentive Meta-Learner by Nikhil Mishra, Mostafa Rohaninejad, Xi Chen, Pieter Abbeel

- Low-Shot Learning from Imaginary Data by Yu-Xiong Wang, Ross Girshick, Martial Hebert, Bharath Hariharan

Tutorial #4: auxiliary tasks in deep reinforcement learning

Author(s): P. Hernandez-Leal, B. Kartal, M. E. Taylor

This tutorial focuses on the use of auxiliary tasks to speed up learning in deep reinforcement learning (RL). Auxiliary tasks are additional tasks that are learned simultaneously with the main RL goal and that generate a more consistent learning signal. The system uses these signals to learn a shared representation, which speeds up progress on the main RL task. The tutorial also explores examples from a variety of domains.
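
The basic recipe is to add a weighted auxiliary loss to the RL loss so that both shape the shared parameters. A refinement explored in the gradient-similarity paper listed below is to use the auxiliary gradient only when it agrees in direction with the main task's gradient. A minimal sketch of that gating rule, assuming the two gradients with respect to the shared parameters are already available as plain vectors; `aux_weight` and the toy vectors are hypothetical.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def combined_gradient(main_grad, aux_grad, aux_weight=1.0):
    """Update direction for the shared parameters: the main RL gradient plus the
    weighted auxiliary gradient, keeping the auxiliary term only if it does not conflict."""
    if cosine_similarity(main_grad, aux_grad) > 0:
        return [g + aux_weight * a for g, a in zip(main_grad, aux_grad)]
    return list(main_grad)


helpful = combined_gradient([1.0, 0.5], [0.5, 0.5], aux_weight=0.5)
conflicting = combined_gradient([1.0, 0.5], [-1.0, 0.0], aux_weight=0.5)
```

The gate keeps an auxiliary task from pulling the shared representation away from what the main task needs, while still letting it add signal when rewards are sparse.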

📖 Further Reading:

- Terminal Prediction as an Auxiliary Task for Deep Reinforcement Learning by Bilal Kartal, Pablo Hernandez-Leal, Matthew E. Taylor

- Reinforcement Learning with Unsupervised Auxiliary Tasks by Max Jaderberg, Volodymyr Mnih, Wojciech Marian Czarnecki, Tom Schaul, Joel Z Leibo, David Silver, Koray Kavukcuoglu

- Adapting Auxiliary Losses Using Gradient Similarity by Yunshu Du, Wojciech M. Czarnecki, Siddhant M. Jayakumar, Mehrdad Farajtabar, Razvan Pascanu, Balaji Lakshminarayanan

Tutorial #5: variational autoencoders

Author(s): S. Prince

In this tutorial, we discuss latent variable models in general and then the specific case of the non-linear latent variable model. We’ll see that maximum likelihood learning of this model is not straightforward, but we can define a lower bound on the likelihood. We then show how the autoencoder architecture can approximate this bound using a Monte Carlo (sampling) method. To maximize the bound, we need to compute derivatives, but unfortunately, it’s not possible to compute the derivative of the sampling component. We’ll show how to side-step this problem using the reparameterization trick. Finally, we’ll discuss extensions of the VAE and some of its drawbacks.
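
The reparameterization trick rewrites a sample z ~ N(μ, σ²) as z = μ + σ·ε with ε ~ N(0, 1), so the randomness no longer depends on μ and σ and derivatives can flow through each sample. A minimal sketch, assuming the loss E[z²] as a stand-in for the decoder's reconstruction term, because its exact gradients (2μ and 2σ) are known and the Monte Carlo estimates can be checked against them. The closed-form KL term for a standard-normal prior is included for completeness; the function names are hypothetical.

```python
import math
import random


def gaussian_kl(mu, sigma):
    """KL( N(mu, sigma^2) || N(0, 1) ) for one latent dimension."""
    return 0.5 * (mu ** 2 + sigma ** 2 - 1.0 - math.log(sigma ** 2))


def reparameterized_gradients(mu, sigma, n_samples=100_000, seed=0):
    """Monte Carlo estimate of d/dmu and d/dsigma of E[z^2] with z ~ N(mu, sigma^2)."""
    rng = random.Random(seed)
    grad_mu = grad_sigma = 0.0
    for _ in range(n_samples):
        eps = rng.gauss(0.0, 1.0)
        z = mu + sigma * eps          # the sample is now a deterministic function of mu, sigma
        dloss_dz = 2.0 * z            # loss(z) = z^2
        grad_mu += dloss_dz * 1.0     # chain rule: dz/dmu = 1
        grad_sigma += dloss_dz * eps  # chain rule: dz/dsigma = eps
    return grad_mu / n_samples, grad_sigma / n_samples


g_mu, g_sigma = reparameterized_gradients(mu=1.5, sigma=0.5)
```

With μ = 1.5 and σ = 0.5 the estimates land close to the exact values 3.0 and 1.0; in a VAE the same pattern is applied to every latent dimension and the gradients continue back into the encoder.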

📖 Further Reading:

- Auto-Encoding Variational Bayes by Diederik P Kingma, Max Welling

- Variational Inference with Normalizing Flows by Danilo Jimenez Rezende, Shakir Mohamed

- beta-VAE: Learning Basic Visual Concepts with a Constrained Variational Framework by Irina Higgins, Loic Matthey, Arka Pal, Christopher Burgess, Xavier Glorot, Matthew Botvinick, Shakir Mohamed, Alexander Lerchner

Tutorial #6: neural natural language generation – decoding algorithms

Author(s): L. El Asri, S. Prince

Neural natural language generation (NNLG) refers to the problem of generating coherent and intelligible text using neural networks. Example applications include response generation in dialogue, summarization, image captioning, and question answering. In this tutorial, we assume that the generated text is conditioned on an input. For example, the system might take a structured input like a chart or table and generate a concise description. Alternatively, it might take an unstructured input like a question in text form and generate an output which is the answer to this question.
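
A decoding algorithm turns the model's distribution over the next token into an actual choice. A minimal sketch of three common rules, assuming a single hypothetical next-token distribution: greedy decoding, top-k sampling, and nucleus (top-p) sampling from The Curious Case of Neural Text Degeneration (listed below). A real decoder repeats this step token by token, conditioning on the input and the text generated so far.

```python
import random


def greedy(probs):
    """Pick the single most probable token."""
    return max(probs, key=probs.get)


def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


def nucleus_filter(probs, top_p):
    """Keep the smallest set of most probable tokens whose total mass reaches top_p."""
    kept, mass = [], 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept.append((tok, p))
        mass += p
        if mass >= top_p:
            break
    return {tok: p / mass for tok, p in kept}


def sample(probs, rng):
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


next_token = {"the": 0.5, "a": 0.3, "this": 0.15, "banana": 0.05}
rng = random.Random(0)
choice = sample(nucleus_filter(next_token, top_p=0.75), rng)
```

Greedy decoding always returns "the", which tends to produce repetitive text over long outputs; top-k and nucleus sampling keep some diversity while cutting off the unreliable low-probability tail ("banana" here). Nucleus sampling adapts the number of kept tokens to how peaked the distribution is.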

📖 Further Reading:

- The Curious Case of Neural Text Degeneration by Ari Holtzman, Jan Buys, Li Du, Maxwell Forbes, Yejin Choi

Tutorial #7: neural natural language generation – sequence level training

Author(s): L. El Asri, S. Prince

In this tutorial, we consider alternative training approaches that compare the complete generated sequence to the ground truth at the sequence level. We’ll consider two families of methods. In the first, we take models that have been trained using the maximum likelihood criterion and fine-tune them with a sequence-level cost function, using either reinforcement learning or minimum risk training.
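
Minimum risk training replaces the per-token likelihood with the expected cost of whole sequences under the model. A minimal sketch, assuming a small set of candidate sequences with model log-probabilities, a sharpness parameter `alpha` used to renormalize the distribution over that set, and 1 - unigram F1 as a simple stand-in for a sequence-level metric such as BLEU. The candidates and numbers are hypothetical.

```python
import math
from collections import Counter


def unigram_f1(hyp, ref):
    """Token-overlap F1 between two token lists (a simple stand-in for BLEU or ROUGE)."""
    overlap = sum((Counter(hyp) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    return 2.0 * overlap / (len(hyp) + len(ref))


def expected_risk(candidates, ref, alpha=1.0):
    """Expected cost under the model distribution renormalized over the candidates.

    candidates: list of (tokens, log_prob) pairs.
    Returns the risk and its gradient w.r.t. each candidate's log-probability.
    """
    scaled = [alpha * lp for _, lp in candidates]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]
    z = sum(weights)
    q = [w / z for w in weights]
    costs = [1.0 - unigram_f1(toks, ref) for toks, _ in candidates]
    risk = sum(qi * ci for qi, ci in zip(q, costs))
    # d risk / d log_prob_i = alpha * q_i * (cost_i - risk): raising the probability
    # of a better-than-average candidate lowers the expected risk.
    grads = [alpha * qi * (ci - risk) for qi, ci in zip(q, costs)]
    return risk, grads


ref = "the cat sat".split()
candidates = [("the cat sat".split(), math.log(0.2)), ("a dog ran".split(), math.log(0.2))]
risk, grads = expected_risk(candidates, ref)
```

Unlike maximum likelihood, the cost here is computed on complete sequences, so the training signal can reward outputs that differ from the reference token by token but still score well on the sequence-level metric.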

📖 Further Reading:

- A Comprehensive Survey on Safe Reinforcement Learning by Javier Garcia and Fernando Fernandez

- Ordered Neurons: Integrating Tree Structures into Recurrent Neural Networks by Yikang Shen, Shawn Tan, Alessandro Sordoni, Aaron Courville

Tutorial #8: Bayesian optimization

Author(s): M. O. Ahmed, S. Prince

In this tutorial, we dive into Bayesian optimization, its key components, and its applications. Optimization is at the heart of machine learning, and Bayesian optimization is a framework that can tackle many of the optimization problems we discuss. The core idea is to build a model of the entire function that we are optimizing. This model includes both our current estimate of that function and the uncertainty around that estimate. By considering this model, we choose where to sample the function next, and we then update the model based on the observed sample. This process continues until we are sufficiently certain of where the best point on the function is.
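
The loop described above can be sketched in a few dozen lines. A minimal sketch, assuming a Gaussian process surrogate with a squared-exponential kernel (length scale 0.2), an upper-confidence-bound acquisition rule (mean + 2 × standard deviation), and a 1-D search space discretized to a grid on [0, 1]; these are common choices but our assumptions here, and the tutorial also discusses other models and acquisition functions.

```python
import math


def rbf(a, b, length_scale=0.2):
    """Squared-exponential kernel: nearby inputs have strongly correlated outputs."""
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def forward_substitute(L, b):
    """Solve L x = b for lower-triangular L."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x


def back_substitute(L, b):
    """Solve L^T x = b for lower-triangular L."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def fit_gp(xs, ys, noise=1e-4):
    """Cholesky-factorize K + noise*I and precompute alpha = (K + noise*I)^-1 y."""
    n = len(xs)
    K = [[rbf(xs[i], xs[j]) + (noise if i == j else 0.0) for j in range(n)] for i in range(n)]
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(max(K[i][i] - s, 1e-12))
            else:
                L[i][j] = (K[i][j] - s) / L[j][j]
    return L, back_substitute(L, forward_substitute(L, ys))


def predict(xs, L, alpha, x):
    """Posterior mean and standard deviation of the GP at a single input x."""
    k_star = [rbf(x, xi) for xi in xs]
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = forward_substitute(L, k_star)
    var = max(rbf(x, x) - sum(t * t for t in v), 1e-12)
    return mean, math.sqrt(var)


def bayes_opt(f, n_iters=20, beta=2.0):
    """Maximize f on [0, 1] with a GP surrogate and an upper-confidence-bound rule."""
    grid = [i / 100 for i in range(101)]
    xs = [0.0, 1.0]
    ys = [f(x) for x in xs]
    for _ in range(n_iters):
        L, alpha = fit_gp(xs, ys)  # update the model with every observation so far

        def ucb(x):
            mean, std = predict(xs, L, alpha, x)
            return mean + beta * std  # favor points that look good or are uncertain

        x_next = max(grid, key=ucb)
        xs.append(x_next)
        ys.append(f(x_next))
    best = max(range(len(xs)), key=lambda i: ys[i])
    return xs[best], ys[best]


best_x, best_y = bayes_opt(lambda x: -(x - 0.3) ** 2)
```

The `beta` parameter sets the balance between exploring uncertain regions and exploiting regions the model already believes are good; in practice the objective would be something expensive, such as a model's validation accuracy as a function of a hyperparameter.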

📖 Further Reading:

- Practical Bayesian Optimization of Machine Learning Algorithms by Jasper Snoek, Hugo Larochelle, Ryan P. Adams

- Sequential Model-Based Optimization for General Algorithm Configuration by Frank Hutter, Holger H. Hoos and Kevin Leyton-Brown

- Algorithms for Hyper-Parameter Optimization by James Bergstra, Remi Bardenet, Yoshua Bengio, Balazs Kegl

Tutorial #9: SAT Solvers I: Introduction and Applications

Author(s): S. Prince

This tutorial concerns the Boolean satisfiability or SAT problem. We are given a formula containing binary variables that are connected by logical operators such as OR and AND. We aim to establish whether there is any way to set these variables so that the formula evaluates to true. Algorithms that solve this problem are known as SAT solvers.
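
The problem statement can be made concrete with the simplest possible solver: try every assignment. A minimal sketch, assuming the formula is in conjunctive normal form (an AND of OR-clauses), which is the usual input format for SAT solvers, and encoding literals as signed integers (3 means x3, -3 means NOT x3) as in the DIMACS convention. The function names are hypothetical.

```python
from itertools import product


def satisfies(clauses, assignment):
    """True if every clause contains at least one literal made true by the assignment."""
    return all(any(assignment[abs(lit)] == (lit > 0) for lit in clause) for clause in clauses)


def brute_force_sat(clauses):
    """Try all 2^n assignments; return a satisfying one as a dict, or None if UNSAT."""
    variables = sorted({abs(lit) for clause in clauses for lit in clause})
    for values in product([False, True], repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if satisfies(clauses, assignment):
            return assignment
    return None


# (x1 OR x2) AND (NOT x1 OR x2) AND (NOT x2 OR x3)
formula = [[1, 2], [-1, 2], [-2, 3]]
model = brute_force_sat(formula)
unsat = brute_force_sat([[1], [-1]])  # x1 AND NOT x1 can never be true
```

The running time doubles with every added variable, which is why practical SAT solvers rely on the much smarter search strategies covered in part II.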

📖 Further Reading:

- CSC410 tutorial: solving SAT problems with Z3 by University of Toronto, Computer Science Department

- SAT/SMT by Example by Dennis Yurichev

Tutorial #10: SAT Solvers II: Algorithms

Author(s): S. Prince, C. Srinivasa

In this tutorial, we focus exclusively on the algorithms that SAT solvers use to tackle the satisfiability problem. We’ll start by introducing two ways to manipulate Boolean logic formulae. We’ll then exploit these manipulations to develop algorithms of increasing complexity. We’ll conclude with an introduction to conflict-driven clause learning, which underpins most modern SAT solvers.
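
The classic DPLL algorithm is a good example of this progression: it conditions the formula on a chosen variable value, applies unit propagation (a clause with a single remaining literal forces that literal to be true), and backtracks on conflict. A minimal recursive sketch, assuming CNF input with literals encoded as signed integers (3 means x3, -3 means NOT x3); it omits the clause learning and branching heuristics of modern CDCL solvers, and the function names are hypothetical.

```python
def condition(clauses, lit):
    """Simplify the formula under the assumption that literal `lit` is true."""
    result = []
    for clause in clauses:
        if lit in clause:
            continue  # clause already satisfied, drop it
        result.append([other for other in clause if other != -lit])  # drop the false literal
    return result


def dpll(clauses, assignment=None):
    """Return a (possibly partial) satisfying assignment as a dict, or None if UNSAT.

    Variables missing from the result can take either value.
    """
    assignment = dict(assignment or {})
    # Unit propagation: repeatedly satisfy clauses that have a single literal left.
    while True:
        if any(len(clause) == 0 for clause in clauses):
            return None  # an empty clause can no longer be satisfied: conflict
        units = [clause[0] for clause in clauses if len(clause) == 1]
        if not units:
            break
        assignment[abs(units[0])] = units[0] > 0
        clauses = condition(clauses, units[0])
    if not clauses:
        return assignment  # every clause is satisfied
    # Branch on the first literal of the first clause: try it true, then false.
    lit = clauses[0][0]
    for choice in (lit, -lit):
        result = dpll(condition(clauses, choice), {**assignment, abs(choice): choice > 0})
        if result is not None:
            return result
    return None


formula = [[1, 2], [-1, 2], [-2, 3], [-3, -1]]
model = dpll(formula)
unsat = dpll([[1, 2], [-1, 2], [1, -2], [-1, -2]])
```

On the first formula, trying x1 = true propagates x2 and then x3, which empties the clause (NOT x3 OR NOT x1); the solver backtracks, sets x1 = false, and finds the model x1 = false, x2 = true, x3 = true. Conflict-driven clause learning goes further by recording *why* such a conflict happened so the same dead end is never explored again.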

📖 Further Reading:

- Handbook of Satisfiability by H. Van Maaren, T. Walsh, A. Biere, and M. Heule

- The Art of Computer Programming, Volume 4, Fascicle 6: Satisfiability by Donald E. Knuth

Tutorial #11: SAT Solvers III: Factor graphs and SMT solvers

Author(s): S. Prince, C. Srinivasa

This third and final tutorial of our SAT solvers series is divided into two self-contained sections. First, we consider a completely different approach to solving satisfiability problems, one that is based on factor graphs. Second, we discuss methods that allow us to apply the SAT machinery to problems with continuous variables.
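
SMT solvers typically pair the SAT search with a theory solver that checks whether the constraints implied by the current Boolean assignment are consistent. A minimal sketch of such a check for difference logic (constraints of the form x - y <= c over real variables), a theory we picked for illustration because its consistency test reduces to negative-cycle detection with the Bellman-Ford algorithm; the function name and constraints are hypothetical.

```python
def check_difference_constraints(constraints):
    """Theory check for a conjunction of constraints x - y <= c.

    Each constraint (x, y, c) becomes an edge y -> x with weight c. The conjunction is
    satisfiable iff this graph has no negative cycle; if it is, shortest-path distances
    from a virtual source form a model. Returns a dict model, or None if inconsistent.
    """
    variables = {v for x, y, _ in constraints for v in (x, y)}
    dist = {v: 0.0 for v in variables}  # virtual source with 0-weight edges to every variable
    for _ in range(len(variables)):
        changed = False
        for x, y, c in constraints:
            if dist[y] + c < dist[x]:
                dist[x] = dist[y] + c
                changed = True
        if not changed:
            return dist  # now dist[x] <= dist[y] + c holds for every constraint
    # Still improving after |V| passes means a negative cycle, i.e. a contradiction.
    for x, y, c in constraints:
        if dist[y] + c < dist[x]:
            return None
    return dist


# x - y <= 2, y - z <= -3, z - x <= 0 sums to 0 <= -1, so it is inconsistent.
conflict = check_difference_constraints([("x", "y", 2), ("y", "z", -3), ("z", "x", 0)])
consistent = [("x", "y", 2), ("y", "z", -3), ("z", "x", 1)]
model = check_difference_constraints(consistent)
```

When the check fails, a full SMT solver reports the offending constraints back to the SAT search as a new clause, so the same combination of Boolean choices is not tried again.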

📖 Further Reading:

- Information, Physics, and Computation by Marc Mézard and Andrea Montanari

Tutorial #12: Differential Privacy I: Introduction

Author(s): M. Brubaker, S. Prince

In this two-part tutorial, we explore the issue of privacy in machine learning. In part I, we discuss definitions of privacy in data analysis and cover the basics of differential privacy. Part II considers the problem of how to perform machine learning with differential privacy.
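
A canonical first example of differential privacy is the Laplace mechanism, described in The Algorithmic Foundations of Differential Privacy (listed below). A counting query changes by at most 1 when one person's record is added or removed (its sensitivity is 1), so adding Laplace noise with scale 1/ε to the true count gives ε-differential privacy. A minimal sketch, assuming a hypothetical list of ages and the query "how many people are 40 or older?"; the function names are also hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential variables."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """epsilon-differentially private count using the Laplace mechanism."""
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0  # one record changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
ages = [23, 35, 41, 52, 67, 29, 38, 71]
# Repeated only to inspect the noise distribution; every real release spends privacy budget.
answers = [private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng) for _ in range(20000)]
mean_answer = sum(answers) / len(answers)
```

Smaller ε means more noise and stronger privacy: with ε = 0.5 the noise has scale 2 and variance 8 around the true count of 4. Answering the same query many times would degrade the guarantee, because privacy loss accumulates across releases.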

📖 Further Reading:

- The Algorithmic Foundations of Differential Privacy by Cynthia Dwork and Aaron Roth

Tutorial #13: Differential Privacy II: machine learning and data generation

Author(s): G. Sharma, N. Hegde, S. Prince, M. Brubaker

In this tutorial, we present recent methods for making machine learning differentially private. We will also discuss differentially private methods for generative modelling, which provide an enticing solution to a seemingly intractable problem: how can we release data for general use while still protecting privacy?
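
One widely used recipe for differentially private training is DP-SGD (Abadi et al., 2016): bound each example's influence by clipping its gradient to a maximum norm, sum the clipped gradients, and add Gaussian noise scaled to that bound. Whether the tutorial presents exactly this variant is an assumption; the clipping norm, noise multiplier, and toy gradients below are hypothetical, and the privacy accounting that turns the noise level into an (ε, δ) guarantee is omitted.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a per-example gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]


def private_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, and average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    summed = [sum(col) for col in zip(*clipped)]
    sigma = noise_multiplier * max_norm  # noise is calibrated to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [n / len(per_example_grads) for n in noisy]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]
update = private_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng)
```

Clipping is what makes the noise meaningful: without a bound on each gradient's norm, a single unusual example could move the model by an arbitrary amount and no finite noise level would hide it.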

📖 Further Reading:

- RESPECT AI: Improving the efficiency of Differential Privacy by RBC Borealis

- Differential Privacy: The balance between innovation and data protection by F. Agrafioti and A. LaPlante

Tutorial #14: Transformers I: Introduction

Author(s): S. Prince

This tutorial introduces self-attention, the core mechanism that underpins the transformer architecture. We then describe transformers themselves and how they can be used as encoders, decoders, or encoder-decoders, using well-known examples such as BERT and GPT-3. This discussion will be suitable for someone who knows machine learning but is not familiar with the transformer.
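
Self-attention can be written in a few lines: each token forms a query, a key, and a value by linear projection, and its output is an average of all tokens' values, weighted by the softmax of scaled query-key dot products. A minimal single-head sketch, assuming tiny hand-picked projection matrices; multiple heads, masking, and the rest of the transformer layer are omitted, and the function names are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention; X holds one row per token."""
    Q, K, V = matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)
    d_k = len(K[0])
    out = []
    for q in Q:
        # Attention weights of this token over every token, including itself.
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K])
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three tokens, embedding size 2
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
Y = self_attention(X, Wq=identity, Wk=identity, Wv=identity)
Y_uniform = self_attention(X, Wq=identity, Wk=zeros, Wv=identity)
```

With all-zero keys every score is equal, so each token simply averages the values of all three tokens; with learned projections the weights instead reflect how relevant each token is to the one being updated. Because the same computation applies to any number of tokens, the layer handles variable-length inputs naturally.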

📖 Further Reading:

- Attention Is All You Need by Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, Illia Polosukhin

Tutorial #15: Parsing I: context-free grammars and the CYK algorithm

Author(s): A. Kadar, S. Prince

In this tutorial, we review earlier work that models grammatical structure. We introduce the CYK algorithm, which finds the underlying syntactic structure of sentences and forms the basis of many algorithms for linguistic analysis. The algorithms are elegant and interesting for their own sake. However, we also believe that this topic remains important in the age of large transformers. We hypothesize that the future of NLP will consist of merging flexible transformers with linguistically informed algorithms to achieve systematic and compositional generalization in language processing.
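
The CYK algorithm fills a chart bottom-up: for every span of the sentence it records which non-terminals can derive that span, combining the entries of two adjacent sub-spans with binary rules. A minimal recognizer sketch, assuming a grammar already in Chomsky normal form (rules A -> B C or A -> word) and a hypothetical toy grammar.

```python
from collections import defaultdict


def cyk_recognize(words, lexical_rules, binary_rules, start="S"):
    """CYK recognizer for a grammar in Chomsky normal form.

    lexical_rules: dict word -> set of non-terminals A with A -> word.
    binary_rules:  list of (A, B, C) meaning A -> B C.
    chart[(i, j)] holds every non-terminal that can derive words[i:j].
    """
    n = len(words)
    chart = defaultdict(set)
    for i, w in enumerate(words):
        chart[(i, i + 1)] = set(lexical_rules.get(w, ()))
    for length in range(2, n + 1):          # span length
        for i in range(0, n - length + 1):  # span start
            j = i + length
            for k in range(i + 1, j):       # split point
                for a, b, c in binary_rules:
                    if b in chart[(i, k)] and c in chart[(k, j)]:
                        chart[(i, j)].add(a)
    return start in chart[(0, n)]


lexical = {"she": {"NP"}, "fish": {"NP", "V"}, "eats": {"V"}}
binary = [("S", "NP", "VP"), ("VP", "V", "NP")]
```

The three nested loops over span length, start, and split point give the algorithm its cubic running time in sentence length. Storing back-pointers alongside each chart entry would recover the parse trees themselves rather than a yes/no answer.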

📖 Further Reading:

- Lecture 9: The CKY Parsing Algorithm by Julia Hockenmaier

Tutorial #16: Transformers II: Extensions

Author(s): S. Prince

In this tutorial, we focus on two families of modifications that address limitations of the basic architecture, and we draw connections between transformers and other models. This discussion will be suitable for someone who knows how transformers work and wants to know more about subsequent developments.

📖 Further Reading:

- Do Transformer Modifications Transfer Across Implementations and Applications? by Sharan Narang, Hyung Won Chung, Yi Tay, William Fedus, Thibault Fevry, Michael Matena, Karishma Malkan, Noah Fiedel, Noam Shazeer, Zhenzhong Lan, Yanqi Zhou, Wei Li, Nan Ding, Jake Marcus, Adam Roberts, Colin Raffel

Tutorial #17: Transformers III: Training

Author(s): P. Xu, S. Prince

This tutorial discusses the challenges of transformer training dynamics and introduces some of the tricks that practitioners use to get transformers to converge. This discussion will be suitable for researchers who already understand the transformer architecture and who are interested in training transformers and similar models from scratch.
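
Learning-rate warm-up is one of the best-known tricks of this kind; On the Variance of the Adaptive Learning Rate and Beyond (listed below) analyzes why it helps adaptive optimizers such as Adam. A minimal sketch of the warm-up schedule from Attention Is All You Need: the rate grows linearly for `warmup_steps` steps and then decays with the inverse square root of the step. Whether the tutorial uses exactly this schedule is an assumption; the defaults `d_model=512` and `warmup_steps=4000` are the base settings from that paper.

```python
def transformer_lr(step, d_model=512, warmup_steps=4000):
    """Linear warm-up for warmup_steps, then decay proportional to 1/sqrt(step)."""
    step = max(step, 1)  # avoid division by zero at step 0
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)


schedule = [transformer_lr(s) for s in (1, 1000, 4000, 16000)]
```

The peak occurs exactly at `warmup_steps`, where both terms of the `min` are equal. Keeping early updates small gives the optimizer's running statistics time to settle before large steps are taken.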

📖 Further Reading:

- Optimizing Deeper Transformers on Small Datasets by Peng Xu, Dhruv Kumar, Wei Yang, Wenjie Zi, Keyi Tang, Chenyang Huang, Jackie Chi Kit Cheung, Simon J.D. Prince, Yanshuai Cao

- On the Variance of the Adaptive Learning Rate and Beyond by Liyuan Liu, Haoming Jiang, Pengcheng He, Weizhu Chen, Xiaodong Liu, Jianfeng Gao, Jiawei Han

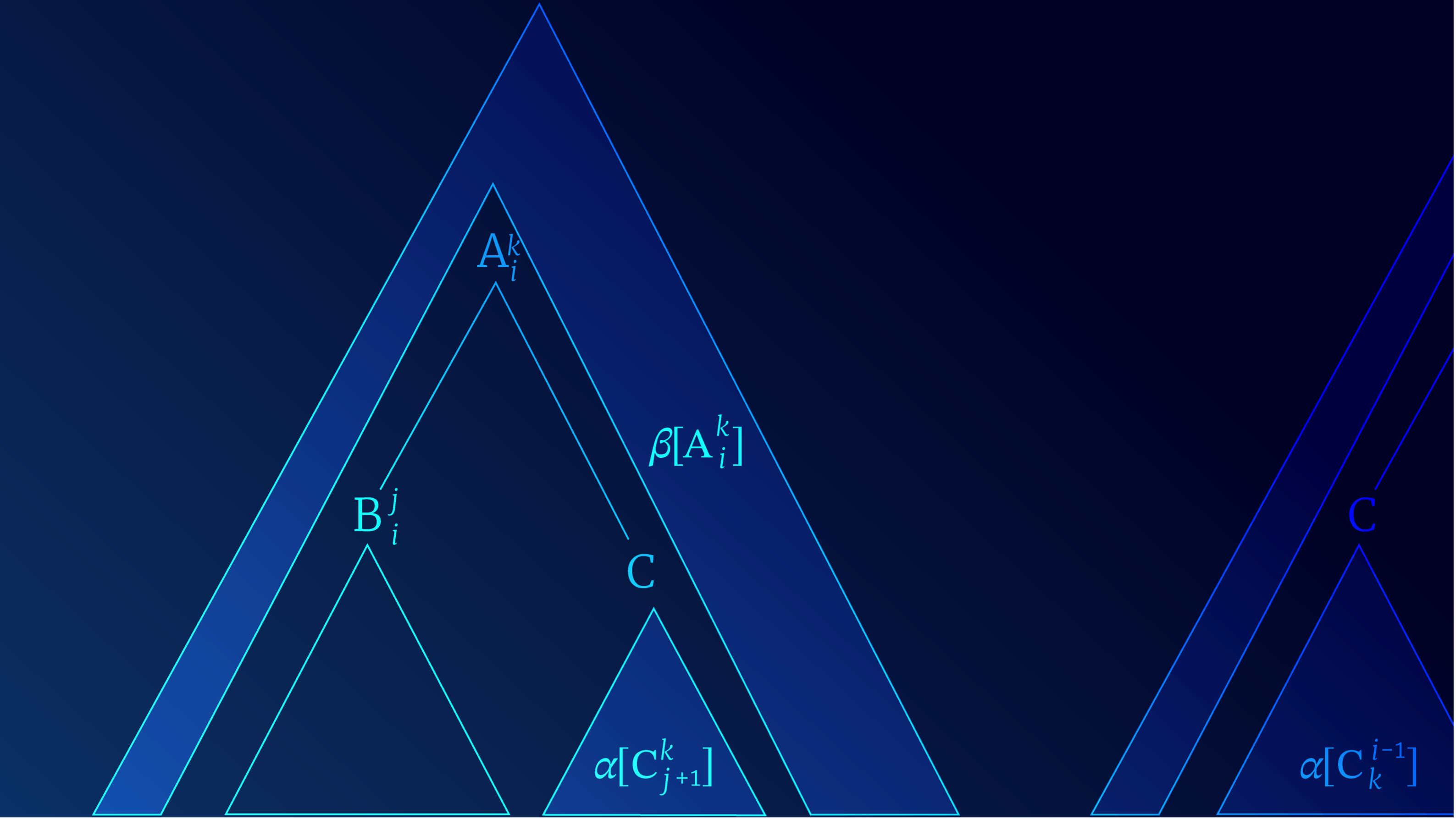

Tutorial #18: Parsing II: WCFGs, the inside algorithm, and weighted parsing

Author(s): A. Kadar, S. Prince

We introduce weighted context-free grammars or WCFGs. These assign a non-negative weight to each rule in the grammar. From here, we can assign a weight to any parse tree by multiplying the weights of its component rules together. We present two variations of the CYK algorithm that apply to WCFGs. (i) The inside algorithm computes the sum of the weights of all possible analyses (parse trees) for a sentence. (ii) The weighted parsing algorithm finds the parse tree with the highest weight.
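
Both variations can share one implementation: the chart now stores a weight for each non-terminal and span, and the only difference is how alternative derivations of the same span are combined, by summing (inside algorithm) or by taking the maximum (best parse). A minimal sketch, assuming a WCFG in Chomsky normal form with non-negative weights and a hypothetical toy grammar.

```python
from collections import defaultdict


def weighted_cyk(words, lexical_rules, binary_rules, combine, start="S"):
    """Weighted CYK over a WCFG in Chomsky normal form.

    lexical_rules: dict word -> list of (A, weight) for rules A -> word.
    binary_rules:  list of (A, B, C, weight) for rules A -> B C.
    combine=sum gives the inside algorithm (total weight of all parse trees);
    combine=max gives the weight of the single best parse tree.
    """
    n = len(words)
    chart = defaultdict(dict)  # chart[(i, j)][A] = combined weight of A over words[i:j]
    for i, w in enumerate(words):
        for a, weight in lexical_rules.get(w, []):
            chart[(i, i + 1)][a] = combine([chart[(i, i + 1)].get(a, 0.0), weight])
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):
                for a, b, c, weight in binary_rules:
                    if b in chart[(i, k)] and c in chart[(k, j)]:
                        # A tree's weight is the product of its rule weights.
                        tree_weight = weight * chart[(i, k)][b] * chart[(k, j)][c]
                        chart[(i, j)][a] = combine([chart[(i, j)].get(a, 0.0), tree_weight])
    return chart[(0, n)].get(start, 0.0)


lexical = {"a": [("S", 2.0)]}
binary = [("S", "S", "S", 0.5)]
total = weighted_cyk("a a a".split(), lexical, binary, combine=sum)
best = weighted_cyk("a a a".split(), lexical, binary, combine=max)
```

The string "a a a" has two parse trees under this grammar, each with weight 0.5 × 0.5 × 2 × 2 × 2 = 2, so the inside weight is 4 and the best-tree weight is 2. Using 0.0 as the starting value works for both modes because the weights are non-negative; recovering the best tree itself requires storing back-pointers.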

Tutorial #19: Parsing III: PCFGs and the inside-outside algorithm

Author(s): A. Kadar, S. Prince

This tutorial covers probabilistic context-free grammars, or PCFGs, which are a special case of WCFGs. They feature more prominently than WCFGs in the earlier statistical NLP literature and in most teaching materials. As the name suggests, they replace the rule weights with probabilities. We will treat these probabilities as model parameters and describe algorithms to learn them for both the supervised and the unsupervised cases. The latter is tackled by expectation-maximization (EM) and leads us to develop the inside-outside algorithm, which computes the expected rule counts that are required for the EM updates.
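
In the supervised case, where the parse trees are observed, the maximum-likelihood estimate of each rule probability is a relative frequency: how often a non-terminal was expanded with that rule, divided by how often it was expanded at all. In the unsupervised case, EM replaces these observed counts with the expected counts computed by the inside-outside algorithm. A minimal sketch of the supervised estimate, assuming a hypothetical list of rule occurrences read off gold parse trees.

```python
from collections import Counter


def estimate_pcfg(treebank_rules):
    """Maximum-likelihood rule probabilities from observed rule uses.

    treebank_rules: list of (lhs, rhs) pairs, e.g. ("VP", ("V", "NP")).
    P(lhs -> rhs) = count(lhs -> rhs) / count(lhs), so each non-terminal's rules sum to 1.
    """
    rule_counts = Counter(treebank_rules)
    lhs_counts = Counter(lhs for lhs, _ in treebank_rules)
    return {rule: count / lhs_counts[rule[0]] for rule, count in rule_counts.items()}


observed = [
    ("S", ("NP", "VP")), ("S", ("NP", "VP")),
    ("VP", ("V", "NP")), ("VP", ("V",)), ("VP", ("V", "NP")),
    ("NP", ("she",)), ("NP", ("fish",)),
]
probs = estimate_pcfg(observed)
```

Here VP was expanded three times, twice as V NP, so P(VP -> V NP) = 2/3. Normalizing per left-hand side is what turns arbitrary WCFG weights into a proper probability distribution over derivations.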

📖 Further Reading:

- Self-Training for Unsupervised Parsing with PRPN by Anhad Mohananey, Katharina Kann, Samuel R. Bowman

- Unsupervised Discourse Constituency Parsing Using Viterbi EM by Noriki Nishida, Hideki Nakayama

Tutorial #20: Understanding XLNet

Author(s): G. McGoldrick, Y. Cao, S. Prince

This tutorial provides an overview of XLNet, an auto-regressive language model designed for natural language processing tasks. XLNet learns bidirectional context by training over permutations of the factorization order, and it combines the transformer architecture with the segment-level recurrence introduced in Transformer-XL. The tutorial includes a technical overview of XLNet’s pre-training and fine-tuning procedures, as well as its performance on benchmark datasets.
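
XLNet's permutation language modeling keeps the tokens in their original positions but samples a factorization order, and each token is predicted only from the tokens that come before it in that order. Averaged over many orders, every token sees context from both sides. A minimal sketch of the attention mask implied by one order, assuming hypothetical function names; XLNet's two-stream attention and the Transformer-XL recurrence are omitted.

```python
import random


def mask_for_order(order):
    """mask[i][j] is True when position i may attend to position j,
    i.e. when j comes before i in the factorization order."""
    rank = {pos: r for r, pos in enumerate(order)}
    n = len(order)
    return [[rank[j] < rank[i] for j in range(n)] for i in range(n)]


def sample_permutation_mask(seq_len, rng):
    """Sample a random factorization order and build its attention mask."""
    order = list(range(seq_len))
    rng.shuffle(order)
    return order, mask_for_order(order)


order, mask = sample_permutation_mask(5, random.Random(0))
example = mask_for_order([2, 0, 1])
```

For the order [2, 0, 1], position 2 is predicted first with no context, position 0 sees position 2, and position 1 sees positions 2 and 0, so the token in the middle is predicted from both its left and right neighbors, which a standard left-to-right model never does.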

📖 Further Reading:

- TPUs vs GPUs for Transformers (BERT) by Tim Dettmers

- Neural Autoregressive Distribution Estimation by Benigno Uria, Marc-Alexandre Côté, Karol Gregor, Iain Murray, Hugo Larochelle

Work with us!

Impressed by the work of the team?

RBC Borealis is looking to hire for various roles across different teams. Visit our career page now to find the right role for you and join our team!

Careers at RBC Borealis